After you've fine-tuned a model, you may want to test its quality via the Chat Completions API or the Evaluations service.

A Developer Tier deployment allows you to deploy your new model without the hourly hosting fee incurred by Standard or Global deployments. The only charges incurred are per-token. Consult the pricing page for the most up-to-date pricing.

Important

Developer Tier offers no availability SLA and no data residency guarantees. If you require an SLA or data residency, choose an alternative deployment type for testing your model.

Developer Tier deployments have a fixed lifetime of 24 hours. Learn more below about the deployment lifecycle.

Deploy your fine-tuned model

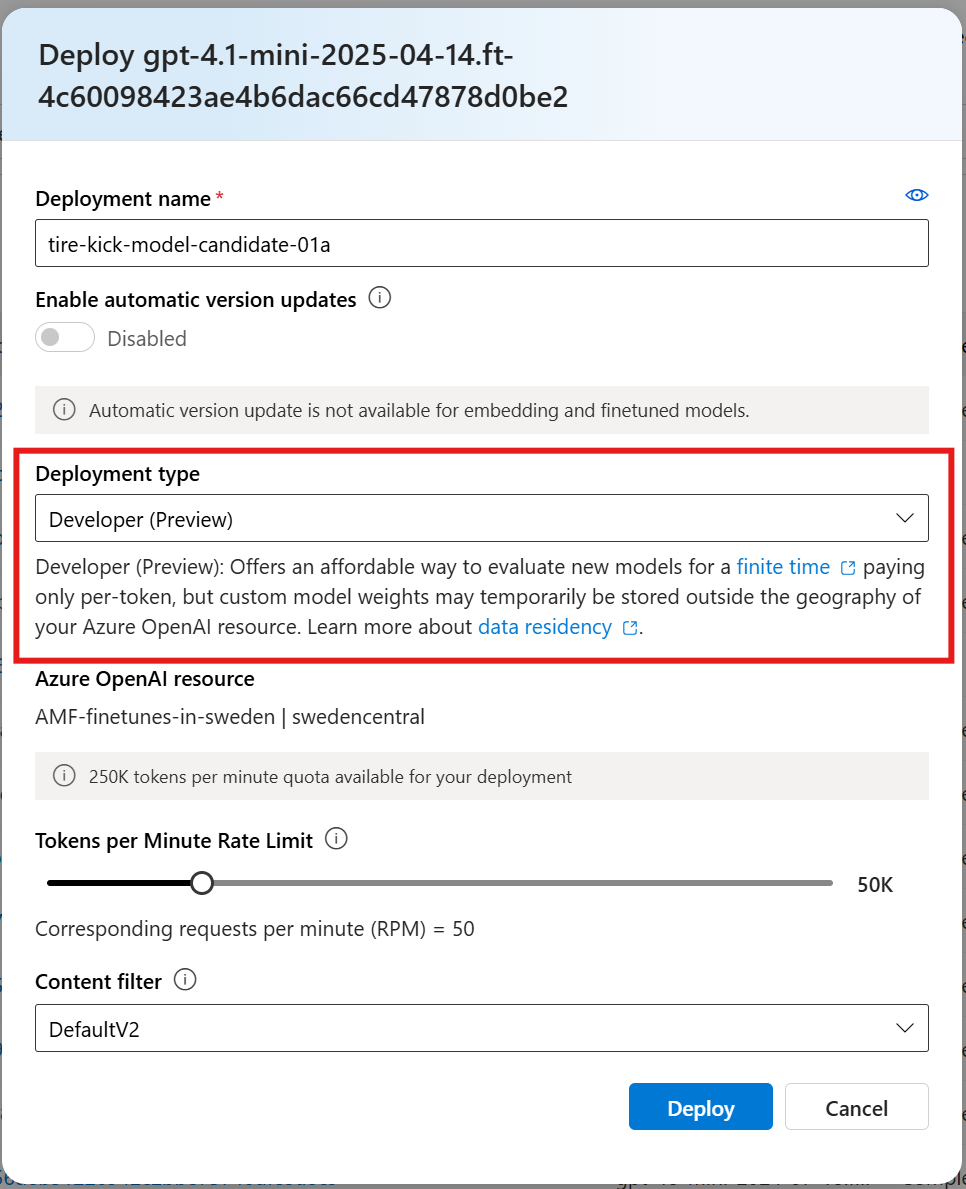

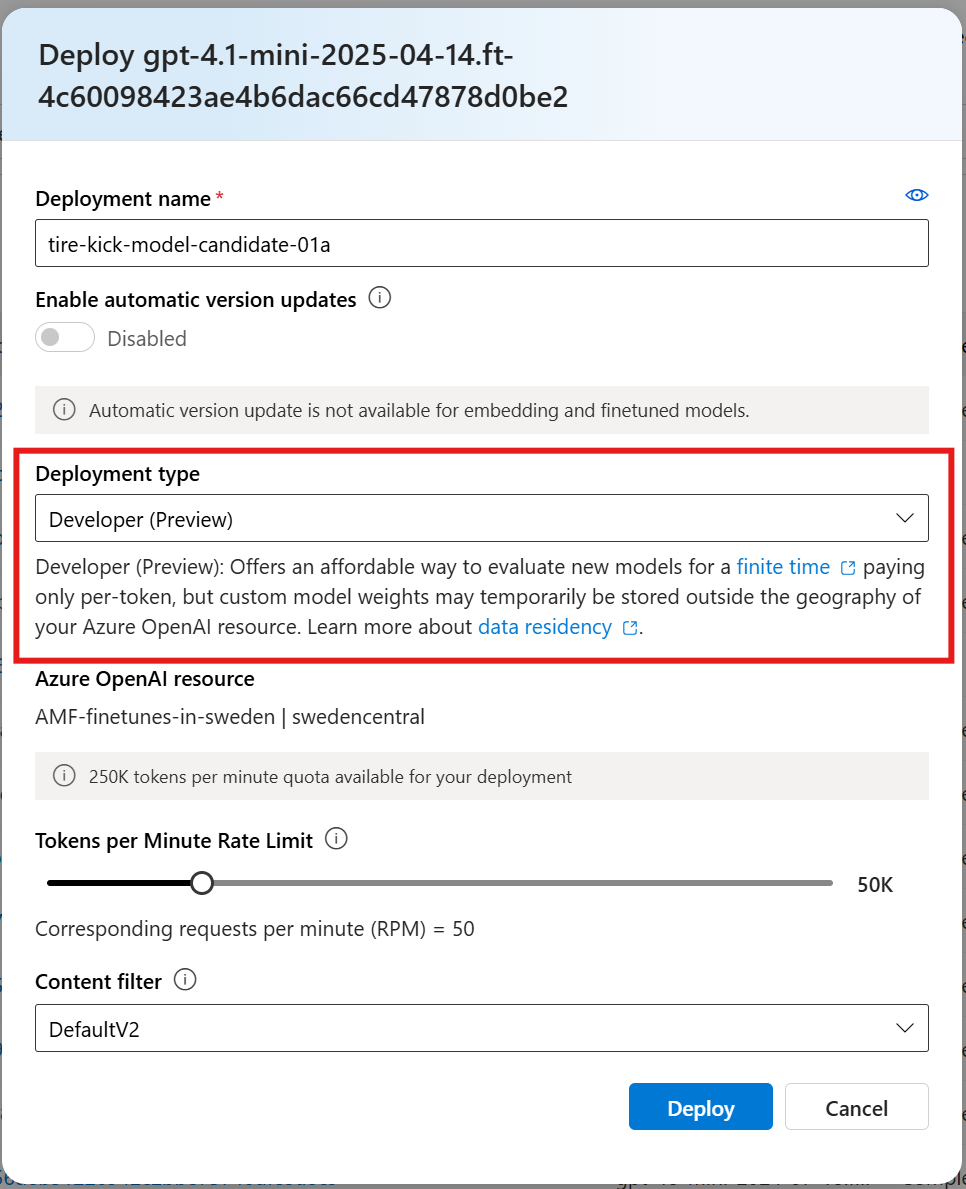

To deploy your model candidate, select the fine-tuned model to deploy, and then select Deploy.

The Deploy model dialog box opens. In the dialog box, enter your Deployment name and then select Developer from the deployment type drop-down. Select Create to start the deployment of your custom model.

You can monitor the progress of your new deployment on the Deployments pane in the Azure AI Foundry portal.

    import json
    import os

    import requests

    # Read the bearer token from the environment variable where you stored it.
    token = os.getenv("<TOKEN>")
    subscription = "<YOUR_SUBSCRIPTION_ID>"
    resource_group = "<YOUR_RESOURCE_GROUP_NAME>"
    resource_name = "<YOUR_AZURE_OPENAI_RESOURCE_NAME>"
    # Custom deployment name that you use to reference the model when making inference calls.
    model_deployment_name = "gpt41-mini-candidate-01"

    deploy_params = {"api-version": "2024-10-21"}
    deploy_headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }

    deploy_data = {
        "sku": {"name": "developer", "capacity": 50},
        "properties": {
            "model": {
                "format": "OpenAI",
                # Retrieve this value from the previous call. It looks like
                # gpt41-mini-candidate-01.ft-b044a9d3cf9c4228b5d393567f693b83
                "name": "<fine_tuned_model>",
                "version": "1",
            }
        },
    }
    deploy_data = json.dumps(deploy_data)

    request_url = (
        f"https://management.azure.com/subscriptions/{subscription}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.CognitiveServices/accounts/{resource_name}"
        f"/deployments/{model_deployment_name}"
    )

    print("Creating a new deployment...")

    # Create (or update) the deployment with an HTTP PUT.
    r = requests.put(request_url, params=deploy_params, headers=deploy_headers, data=deploy_data)

    print(r)
    print(r.reason)
    print(r.json())

| Variable | Definition |
| --- | --- |
| token | There are multiple ways to generate an authorization token. The easiest method for initial testing is to launch the Cloud Shell from the Azure portal. Then run az account get-access-token. You can use this token as your temporary authorization token for API testing. We recommend storing this in a new environment variable. |
| subscription | The subscription ID for the associated Azure OpenAI resource. |
| resource_group | The resource group name for your Azure OpenAI resource. |
| resource_name | The Azure OpenAI resource name. |
| model_deployment_name | The custom name for your new fine-tuned model deployment. This is the name that will be referenced in your code when making chat completion calls. |
| fine_tuned_model | Retrieve this value from your fine-tuning job results in the previous step. It will look like gpt41-mini-candidate-01.ft-b044a9d3cf9c4228b5d393567f693b83. You will need to add that value to the deploy_data json. Alternatively you can also deploy a checkpoint, by passing the checkpoint ID which will appear in the format ftchkpt-e559c011ecc04fc68eaa339d8227d02d |
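Creating a deployment takes some time, so a script usually needs to wait before it sends inference calls. The following is a minimal sketch of a polling loop, not an official client. It assumes that a GET on the same deployment URL returns a JSON body with a `properties.provisioningState` field and that `Succeeded`, `Failed`, and `Canceled` are terminal states. The `wait_for_deployment` function and its `fetch_deployment` argument are hypothetical names introduced for illustration.

```python
import time
from typing import Callable

# Assumption: these provisioningState values mean the deployment will not change further.
TERMINAL_STATES = {"Succeeded", "Failed", "Canceled"}


def wait_for_deployment(
    fetch_deployment: Callable[[], dict],
    poll_seconds: float = 10.0,
    max_polls: int = 60,
) -> str:
    """Poll until the deployment reports a terminal provisioning state.

    fetch_deployment is a hypothetical callable that returns the parsed JSON
    body of a GET request on the deployment resource.
    """
    state = "Unknown"
    for _ in range(max_polls):
        body = fetch_deployment()
        state = body.get("properties", {}).get("provisioningState", "Unknown")
        if state in TERMINAL_STATES:
            return state
        time.sleep(poll_seconds)
    raise TimeoutError(f"Deployment still in state {state!r} after {max_polls} polls")


# Possible wiring with the variables from the example above (not executed here):
# state = wait_for_deployment(
#     lambda: requests.get(request_url, params=deploy_params, headers=deploy_headers).json()
# )
```

Passing the fetch step in as a callable keeps the loop independent of any HTTP library, so it can be exercised with canned responses before it is pointed at a real resource.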

The following example shows how to use the REST API to create a model deployment for your customized model. You specify the name of the deployment as the last segment of the request URL.

    curl -X PUT "https://management.azure.com/subscriptions/<SUBSCRIPTION>/resourceGroups/<RESOURCE_GROUP>/providers/Microsoft.CognitiveServices/accounts/<RESOURCE_NAME>/deployments/<MODEL_DEPLOYMENT_NAME>?api-version=2024-10-21" \
      -H "Authorization: Bearer <TOKEN>" \
      -H "Content-Type: application/json" \
      -d '{
        "sku": {"name": "developer", "capacity": 50},
        "properties": {
            "model": {
                "format": "OpenAI",
                "name": "<FINE_TUNED_MODEL>",
                "version": "1"
            }
        }
    }'

| Variable | Definition |
| --- | --- |
| token | There are multiple ways to generate an authorization token. The easiest method for initial testing is to launch the Cloud Shell from the Azure portal. Then run az account get-access-token. You can use this token as your temporary authorization token for API testing. We recommend storing this in a new environment variable. |
| subscription | The subscription ID for the associated Azure OpenAI resource. |
| resource_group | The resource group name for your Azure OpenAI resource. |
| resource_name | The Azure OpenAI resource name. |
| model_deployment_name | The custom name for your new fine-tuned model deployment. This is the name that will be referenced in your code when making chat completion calls. |
| fine_tuned_model | Retrieve this value from your fine-tuning job results in the previous step. It will look like gpt-35-turbo-0125.ft-b044a9d3cf9c4228b5d393567f693b83. You'll need to add that value to the JSON request body. Alternatively, you can deploy a checkpoint by passing the checkpoint ID, which appears in the format ftchkpt-e559c011ecc04fc68eaa339d8227d02d |
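The token row above suggests running az account get-access-token and storing the result. As a rough sketch, the same step can be scripted from Python. This assumes the Azure CLI is installed, that you have already signed in with az login, and that the command prints a JSON object containing an `accessToken` field. The helper names `parse_access_token` and `get_access_token` are hypothetical.

```python
import json
import subprocess


def parse_access_token(cli_output: str) -> str:
    """Extract the bearer token from the JSON printed by az account get-access-token."""
    return json.loads(cli_output)["accessToken"]


def get_access_token() -> str:
    """Run the Azure CLI and return a short-lived token.

    Assumption: the az executable is on PATH and a sign-in session exists.
    On Windows the executable may need to be invoked as az.cmd.
    """
    result = subprocess.run(
        ["az", "account", "get-access-token", "--output", "json"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_access_token(result.stdout)
```

The token is temporary, so fetching it at the start of each script run avoids reusing an expired value from an old environment variable.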

Deploy a model with Azure CLI

The following example shows how to use the Azure CLI to deploy your customized model. With the Azure CLI, you must specify a name for the deployment of your customized model. For more information about how to use the Azure CLI to deploy customized models, see az cognitiveservices account deployment.

To run this Azure CLI command in a console window, you must replace the following <placeholders> with the corresponding values for your customized model:

| Placeholder | Value |
| --- | --- |
| <YOUR_AZURE_SUBSCRIPTION> | The name or ID of your Azure subscription. |
| <YOUR_RESOURCE_GROUP> | The name of your Azure resource group. |
| <YOUR_RESOURCE_NAME> | The name of your Azure OpenAI resource. |
| <YOUR_DEPLOYMENT_NAME> | The name you want to use for your model deployment. |
| <YOUR_FINE_TUNED_MODEL_ID> | The name of your customized model. |

    az cognitiveservices account deployment create \
        --subscription <YOUR_AZURE_SUBSCRIPTION> \
        --resource-group <YOUR_RESOURCE_GROUP> \
        --name <YOUR_RESOURCE_NAME> \
        --deployment-name <YOUR_DEPLOYMENT_NAME> \
        --model-name <YOUR_FINE_TUNED_MODEL_ID> \
        --model-version "1" \
        --model-format OpenAI \
        --sku-capacity "50" \
        --sku-name "Developer"

Use your deployed fine-tuned model

After your custom model deploys, you can use it like any other deployed model. You can use the Playgrounds in the Azure AI Foundry portal to experiment with your new deployment. You can continue to use the same parameters with your custom model, such as temperature and max_tokens, as you can with other deployed models.

You can also use the Evaluations service to create and run model evaluations against your deployed model candidate as well as other model versions.

    import os

    from openai import AzureOpenAI

    client = AzureOpenAI(
        azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
        api_key=os.getenv("AZURE_OPENAI_API_KEY"),
        api_version="2024-02-01",
    )

    response = client.chat.completions.create(
        model="gpt41-mini-candidate-01",  # The custom deployment name you chose for your fine-tuned model.
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Does Azure OpenAI support customer managed keys?"},
            {"role": "assistant", "content": "Yes, customer managed keys are supported by Azure OpenAI."},
            {"role": "user", "content": "Do other Azure AI services support this too?"},
        ],
    )

    print(response.choices[0].message.content)

    curl "$AZURE_OPENAI_ENDPOINT/openai/deployments/<deployment_name>/chat/completions?api-version=2024-10-21" \
      -H "Content-Type: application/json" \
      -H "api-key: $AZURE_OPENAI_API_KEY" \
      -d '{"messages":[{"role": "system", "content": "You are a helpful assistant."},{"role": "user", "content": "Does Azure OpenAI support customer managed keys?"},{"role": "assistant", "content": "Yes, customer managed keys are supported by Azure OpenAI."},{"role": "user", "content": "Do other Azure AI services support this too?"}]}'
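Before setting up a full evaluation, a quick local spot check can show whether the candidate answers a handful of known prompts sensibly. The sketch below is one possible approach under simple assumptions, not a replacement for the Evaluations service. It scores a reply as correct when it contains an expected substring, which is a crude measure. The `spot_check` function and the `complete` callable are hypothetical; in practice `complete` would wrap a chat completion call against your deployment and return the reply text.

```python
from typing import Callable, Iterable, Tuple


def spot_check(
    complete: Callable[[str], str],
    cases: Iterable[Tuple[str, str]],
) -> float:
    """Return the fraction of prompts whose reply contains the expected substring.

    complete is a hypothetical callable that sends one prompt to the deployed
    model and returns the text of the reply. Matching is case-insensitive.
    """
    case_list = list(cases)
    if not case_list:
        return 0.0
    hits = sum(
        1
        for prompt, expected in case_list
        if expected.lower() in complete(prompt).lower()
    )
    return hits / len(case_list)
```

Because the model call is injected, the same function can compare several deployments by passing a different `complete` callable for each one.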

Clean up your deployment

Developer deployments are deleted automatically, regardless of activity. Each deployment has a fixed lifetime of 24 hours, after which it is subject to removal. Deleting a deployment doesn't delete or affect the underlying customized model, which can be redeployed at any time.
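If you script against a Developer deployment, it can help to track how much of the 24-hour lifetime is left. The following sketch only does the date arithmetic. It assumes you recorded the creation time yourself (for example, when the create call returned), and the helper names `expires_at` and `time_remaining` are hypothetical. The actual removal time is decided by the service and may not fall exactly on the 24-hour mark.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Fixed lifetime of a Developer deployment, as stated above.
DEVELOPER_LIFETIME = timedelta(hours=24)


def expires_at(created_at: datetime) -> datetime:
    """Return the earliest time at which the deployment becomes subject to removal."""
    return created_at + DEVELOPER_LIFETIME


def time_remaining(created_at: datetime, now: Optional[datetime] = None) -> timedelta:
    """Return the time left before expiry, never less than zero."""
    current = now if now is not None else datetime.now(timezone.utc)
    return max(expires_at(created_at) - current, timedelta(0))
```

Use timezone-aware datetimes for both arguments so the subtraction does not mix naive and aware values.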

To delete a deployment manually, you can use the Azure AI Foundry portal, the Azure CLI, or the REST API.

To use the Deployments - Delete REST API, send an HTTP DELETE to the deployment resource. As with creating deployments, you must include the following parameters:

- Azure subscription ID

- Azure resource group name

- Azure OpenAI resource name

- Name of the deployment to delete

Below is the REST API example to delete a deployment:

    curl -X DELETE "https://management.azure.com/subscriptions/<SUBSCRIPTION>/resourceGroups/<RESOURCE_GROUP>/providers/Microsoft.CognitiveServices/accounts/<RESOURCE_NAME>/deployments/<MODEL_DEPLOYMENT_NAME>?api-version=2024-10-21" \
      -H "Authorization: Bearer <TOKEN>"
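The same DELETE request can be assembled in Python. This is a minimal sketch that uses only the standard library and mirrors the URL shape and the four parameters listed above. The function name `build_delete_request` is hypothetical, and the default API version is simply the one used elsewhere in this article.

```python
import urllib.parse
import urllib.request

ARM_BASE = "https://management.azure.com"


def build_delete_request(
    subscription: str,
    resource_group: str,
    resource_name: str,
    deployment_name: str,
    token: str,
    api_version: str = "2024-10-21",
) -> urllib.request.Request:
    """Build (but do not send) an HTTP DELETE for a deployment resource."""
    path = (
        f"/subscriptions/{subscription}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.CognitiveServices/accounts/{resource_name}"
        f"/deployments/{deployment_name}"
    )
    # urlencode adds the api-version pair; the "?" separator is added explicitly.
    query = urllib.parse.urlencode({"api-version": api_version})
    return urllib.request.Request(
        f"{ARM_BASE}{path}?{query}",
        method="DELETE",
        headers={"Authorization": f"Bearer {token}"},
    )
```

To send the request, pass the returned object to `urllib.request.urlopen`. Building the query string with `urlencode` avoids the easy mistake of omitting the `?` before `api-version`.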

Next steps