Azure Monitor managed service for Prometheus is intended to replace self-managed Prometheus so that you don't need to manage a Prometheus server in your Kubernetes clusters. In some scenarios, though, you may want to keep using self-managed Prometheus in your Kubernetes clusters while also sending data to Managed Prometheus for long-term data retention and a centralized view across your clusters. This can be a temporary solution while you migrate to Managed Prometheus or a long-term solution if you have specific requirements for self-managed Prometheus.

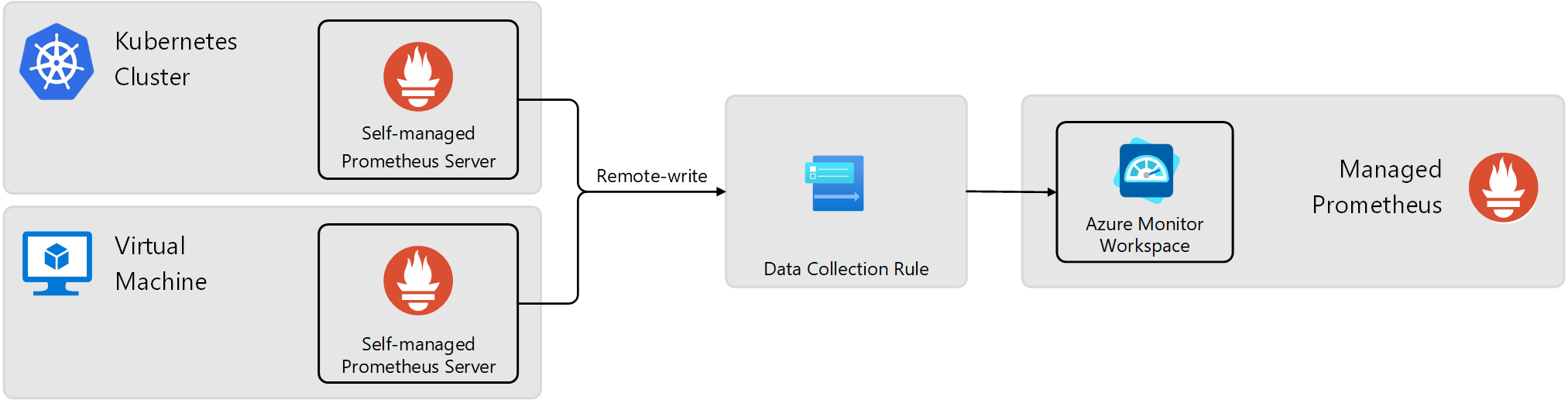

Architecture

Remote_write is a feature in Prometheus that allows you to send metrics from a local Prometheus instance to remote storage or to another Prometheus instance. Use this feature to send metrics from self-managed Prometheus running in your Kubernetes clusters or virtual machines to an Azure Monitor workspace used by Managed Prometheus.

The following diagram illustrates this configuration. A data collection rule (DCR) in Azure Monitor provides an endpoint for the self-managed Prometheus instance to send metrics and defines the Azure Monitor workspace where the data will be sent.

Authentication types

The configuration requirements for remote-write depend on the authentication type used to connect to the Azure Monitor workspace. The following table describes the supported authentication types. The details for each configuration are described in the following sections.

| Type | Clusters supported |
|---|---|
| System-assigned managed identity | Azure Kubernetes Service (AKS)<br>Azure VM/VMSS |
| User-assigned managed identity | Azure Kubernetes Service (AKS)<br>Arc-enabled Kubernetes<br>Azure VM/VMSS |
| Microsoft Entra ID | Azure Kubernetes Service (AKS)<br>Arc-enabled Kubernetes cluster<br>Cluster running in another cloud or on-premises<br>Azure VM/VMSS<br>Arc-enabled servers<br>VM running in another cloud or on-premises |

Note

You can also authenticate with Microsoft Entra Workload ID, but this requires a sidecar container that provides an abstraction for ingesting Prometheus remote-write metrics and helps authenticate packets. For configuration, see Send Prometheus data to Azure Monitor using Microsoft Entra Workload ID authentication.

Azure Monitor workspace

You must create an Azure Monitor workspace before you can configure remote-write. Creating the workspace automatically enables Managed Prometheus. If you don't already have a workspace, see Manage an Azure Monitor workspace.

Create identity for authentication

Before you can configure remote-write, you must create the identity that you'll use to authenticate to the Azure Monitor workspace. The following sections describe how to create each type of identity if you aren't reusing an existing one.

You don't create a system-assigned managed identity directly. Instead, you enable it for an Azure virtual machine or virtual machine scale set (VMSS). For an Azure VM, you can enable the identity when you create the VM or enable it later from its Identity page in the Azure portal. For a VMSS, you must enable it after creation. For the different options to enable a system-assigned managed identity, see Configure managed identities on Azure virtual machines (VMs) and Configure managed identities for Azure resources on a virtual machine scale set.

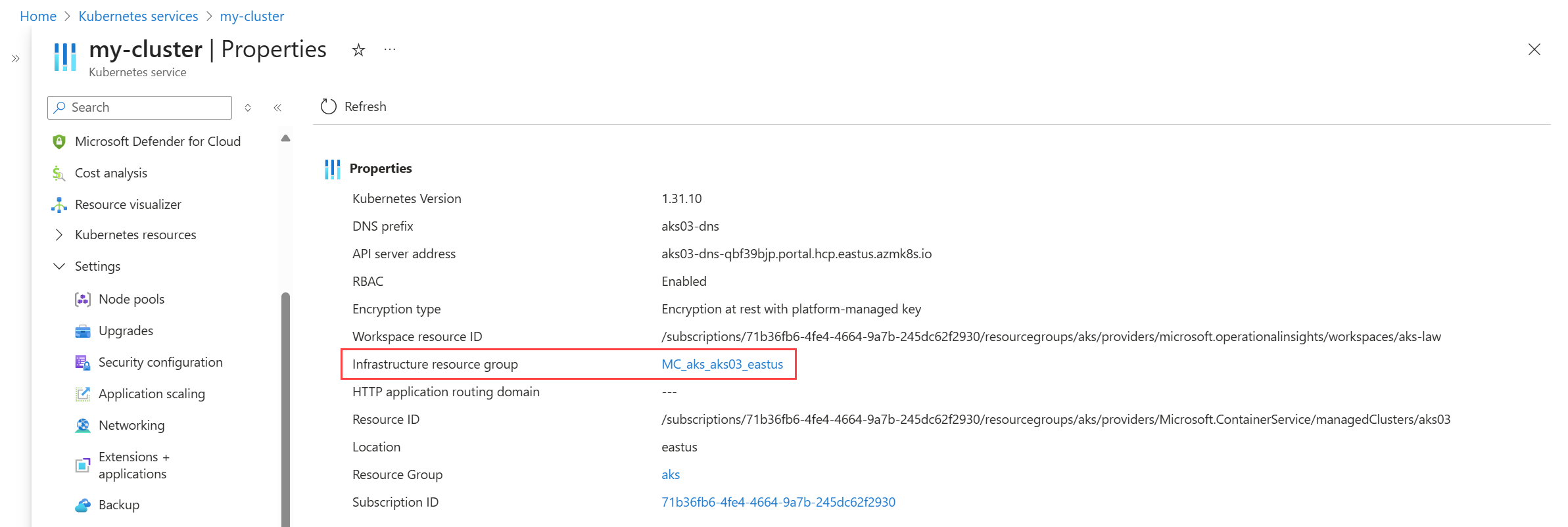

For an AKS cluster, the managed identity must be assigned to the virtual machine scale sets in the cluster. AKS creates a resource group that contains the virtual machine scale sets. Access this resource group from the Properties page in the cluster's menu in the Azure portal. Select Infrastructure Resource Group to list the resources in this resource group. You must enable the system-assigned managed identity for each virtual machine scale set in the resource group.
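The same AKS steps can be scripted with the Azure CLI. The following is a minimal sketch rather than an official procedure: the function name and its two arguments (the cluster's resource group and the cluster name) are hypothetical, and it assumes you're signed in with `az login` and have permission to modify the scale sets.

```shell
# Sketch: enable the system-assigned identity on every scale set that backs
# an AKS cluster. Arguments: cluster resource group, cluster name (placeholders).
enable_identity_on_aks_vmss() {
  cluster_rg="$1"
  cluster_name="$2"

  # AKS keeps its scale sets in a separate infrastructure (node) resource group.
  node_rg=$(az aks show \
    --resource-group "$cluster_rg" \
    --name "$cluster_name" \
    --query nodeResourceGroup \
    --output tsv)

  # With no --identities argument, "identity assign" enables the
  # system-assigned identity on the scale set.
  az vmss list --resource-group "$node_rg" --query "[].name" --output tsv |
    while read -r vmss_name; do
      az vmss identity assign \
        --resource-group "$node_rg" \
        --name "$vmss_name" </dev/null
    done
}

# Example (not run here):
#   enable_identity_on_aks_vmss my-cluster-rg my-aks-cluster
```

Node pools added later create new scale sets, so you would need to run the function again after adding a pool.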

Assign roles

Once the identity that you're going to use is created, it needs to be given access to the data collection rule (DCR) associated with the Azure Monitor workspace that will receive the remote-write data. This DCR is automatically created when you create the workspace. You'll specify this identity in the remote-write configuration for the cluster or VM.

On the Azure Monitor workspace's overview pane, select the Data collection rule link. This opens the data collection rule (DCR) that is associated with the workspace.

On the page for the data collection rule, select Access control (IAM). Select Add and then Add role assignment.

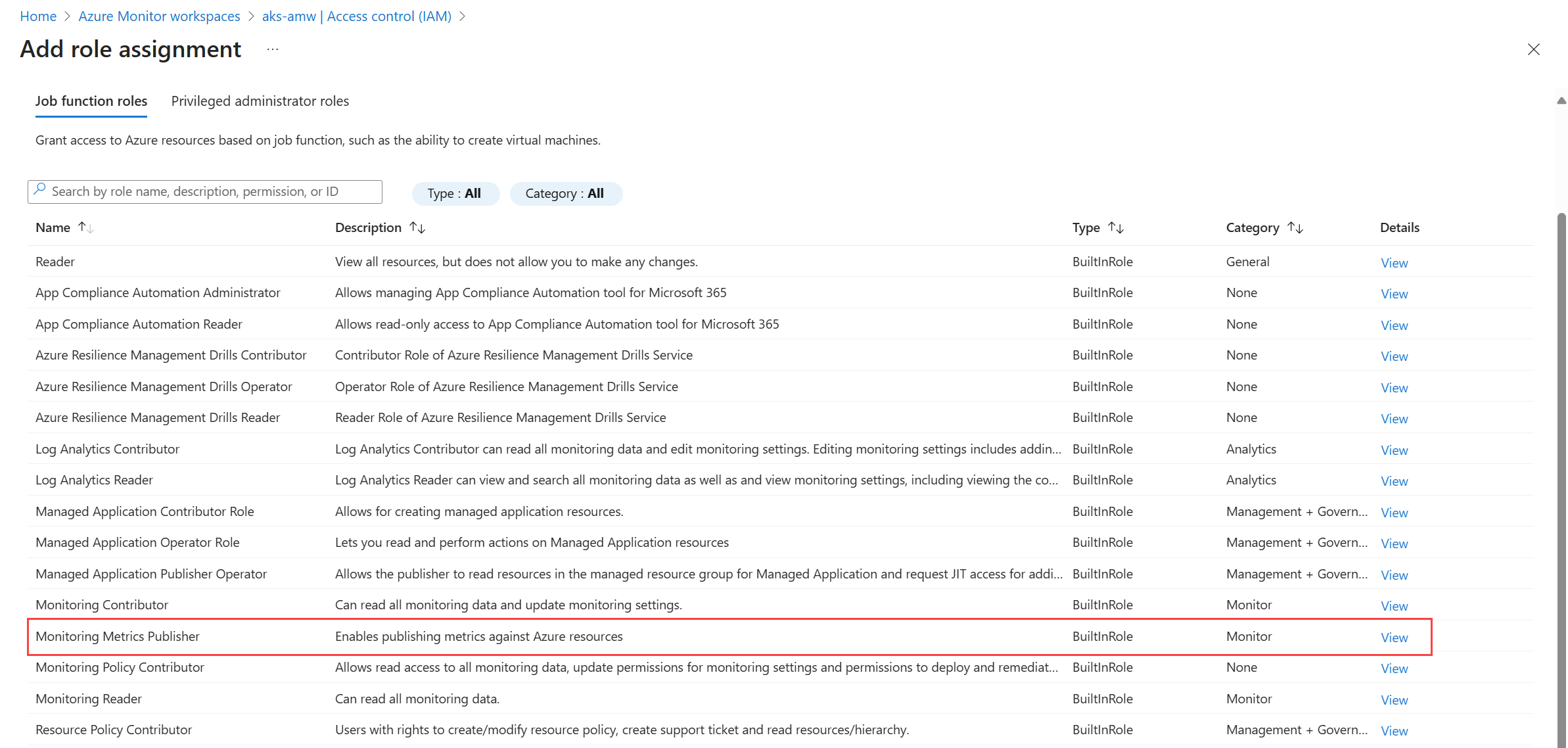

Select the Monitoring Metrics Publisher role, and then select Next.

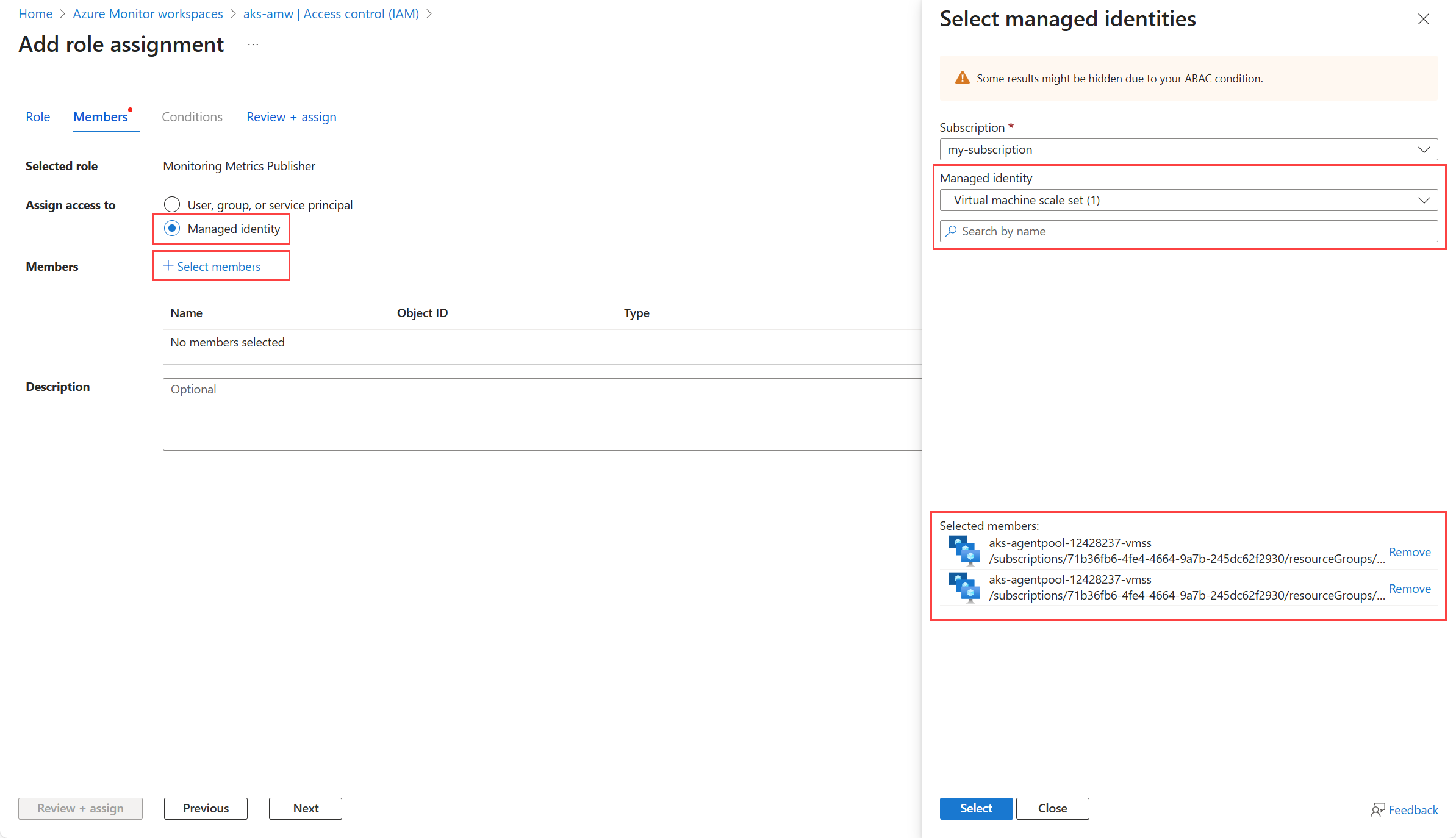

Select the identity to be assigned the role.

- For system-assigned managed identity, select Managed identity and then Select members. In the Managed identity dropdown, select the VM/VMSS or each of the VMSS in the AKS cluster.

- For user-assigned managed identity, select the User-assigned managed identity section, and then select the identity that you created.

- For Microsoft Entra ID, select User, group, or service principal, and then Select members. Select the application that you created, and then choose Select.

Select Select to confirm your selection and then Review + assign to complete the role assignment.
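If you prefer to script the role assignment instead of using the portal, the Azure CLI can create it. This is a sketch under assumptions: the function name is hypothetical, the first argument is the object (principal) ID of the managed identity or of the Entra application's service principal, and the second is the full resource ID of the workspace's DCR.

```shell
# Sketch: grant the Monitoring Metrics Publisher role on the workspace's DCR.
# Arguments: object (principal) ID of the identity, resource ID of the DCR.
assign_metrics_publisher() {
  az role assignment create \
    --assignee-object-id "$1" \
    --assignee-principal-type ServicePrincipal \
    --role "Monitoring Metrics Publisher" \
    --scope "$2"
}

# Example (not run here; both values are placeholders):
#   assign_metrics_publisher "<principal-object-id>" "<dcr-resource-id>"
```

For a system-assigned identity on an AKS cluster, you would call the function once per scale set, because each scale set has its own principal ID.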

Configure remote-write in configuration file

The final step is to add remote write to the configuration file for your self-managed Prometheus server. In addition to details for the identity that you created, you'll also need the metrics ingestion endpoint for the Azure Monitor workspace. Get this value from the Overview page for your Azure Monitor workspace in the Azure portal.
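A truncated or mistyped endpoint is an easy mistake to make when copying the value from the portal. The sample endpoints later in this article share a common shape, so a quick check like the following can catch obvious errors before you edit the configuration. The function name is hypothetical, and the pattern is inferred from those samples rather than taken from a specification, so treat a failed match as a prompt to look again, not as proof that the URL is invalid.

```shell
# Returns 0 when the URL has the same shape as the sample endpoints in this
# article. The pattern is an inference from those samples, not a specification.
looks_like_ingestion_url() {
  printf '%s\n' "$1" | grep -Eq \
    '^https://[^/]+/dataCollectionRules/dcr-[0-9a-f]+/streams/Microsoft-PrometheusMetrics/api/v1/write\?api-version=[0-9A-Za-z-]+$'
}

# Example:
#   looks_like_ingestion_url "$INGESTION_URL" || echo "check the endpoint value"
```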

The remote-write section of the Prometheus configuration file will look similar to the following example, depending on the authentication type that you're using.

Managed identity

remote_write:

- url: "<metrics ingestion endpoint for your Azure Monitor workspace>"

azuread:

cloud: 'AzurePublic' # Options are 'AzurePublic', 'AzureChina', or 'AzureGovernment'.

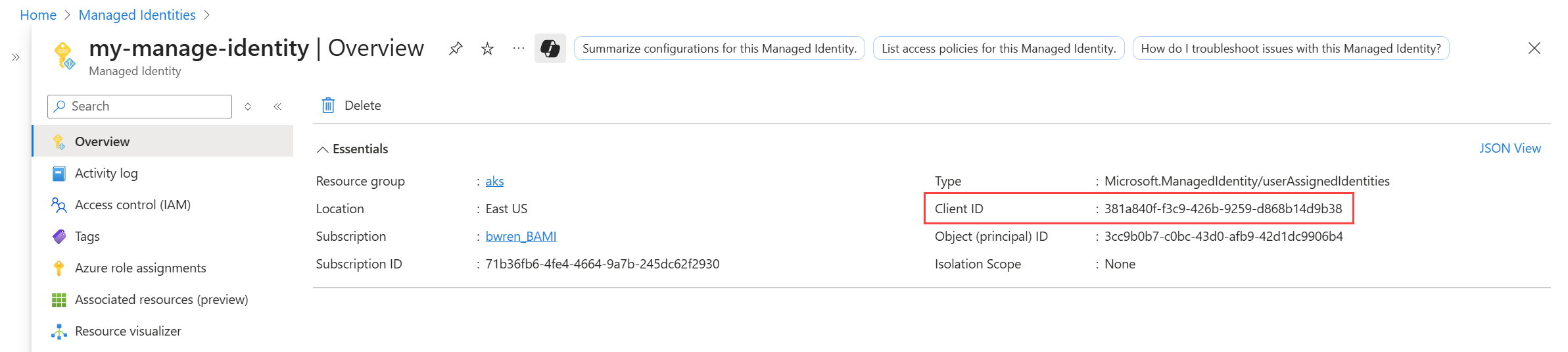

managed_identity:

client_id: "<client-id of the managed identity>"

Entra ID

remote_write:

- url: "<metrics ingestion endpoint for your Azure Monitor workspace>"

azuread:

cloud: 'AzurePublic' # Options are 'AzurePublic', 'AzureChina', or 'AzureGovernment'.

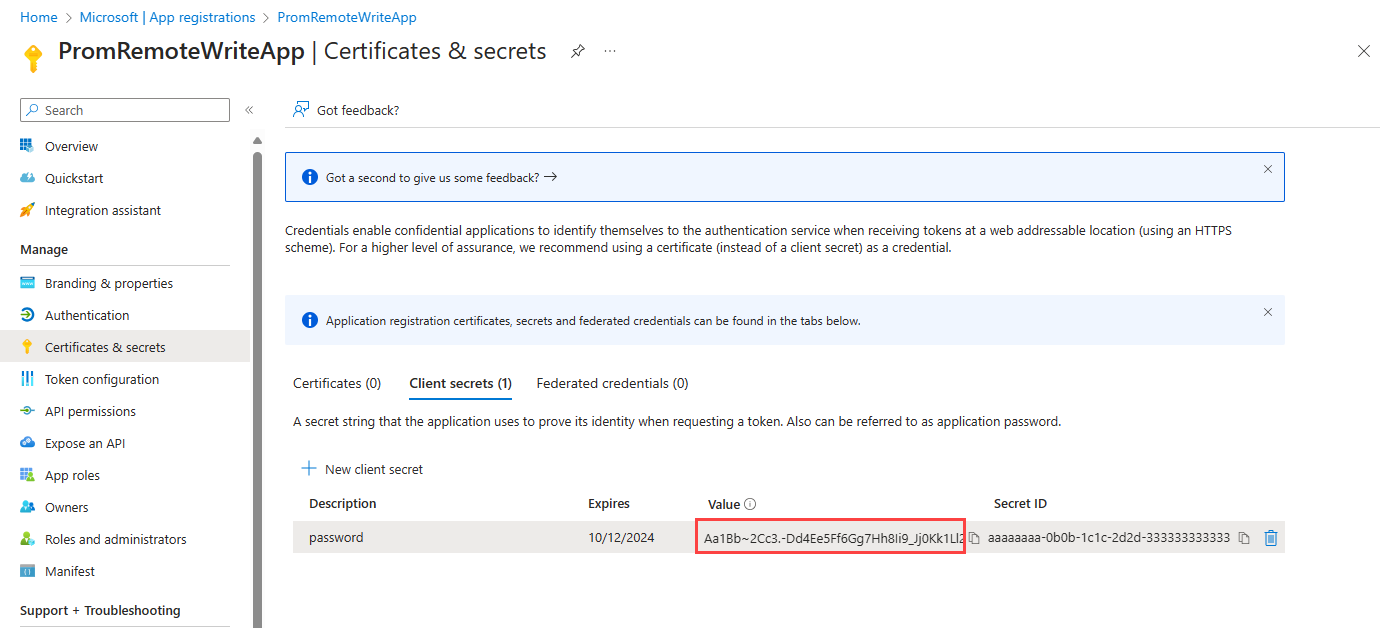

oauth:

client_id: "<client-id from the Entra app>"

client_secret: "<client secret from the Entra app>"

tenant_id: "<Azure subscription tenant Id>"

Apply configuration file updates

Virtual Machine

For a virtual machine, the configuration file is prometheus.yml unless you specify a different one using prometheus --config.file <path-to-config-file> when starting the Prometheus server.
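On a virtual machine, it can help to validate the edited file before Prometheus reads it. The following sketch uses `promtool`, which ships with Prometheus, and then sends SIGHUP, which Prometheus treats as a request to reload its configuration. The function name is hypothetical, and the sketch assumes a single local process named `prometheus` and that `pgrep` is available. If Prometheus runs under a service manager, restarting the service is an alternative.

```shell
# Sketch: validate the edited file, then ask a running Prometheus to reload it.
# Argument: path to the configuration file (placeholder).
validate_and_reload() {
  config_file="$1"

  # A non-zero exit from promtool means the file is invalid; stop here.
  promtool check config "$config_file" || return 1

  # Prometheus reloads its configuration when it receives SIGHUP.
  # Assumes exactly one local process named "prometheus".
  kill -HUP "$(pgrep -x prometheus)"
}

# Example (not run here):
#   validate_and_reload /etc/prometheus/prometheus.yml
```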

Kubernetes cluster

For a Kubernetes cluster, the configuration file is typically stored in a ConfigMap. Following is a sample ConfigMap that includes a remote-write configuration using managed identity for self-managed Prometheus running in a Kubernetes cluster.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-server-conf  # must match what your pod mounts
  namespace: monitoring         # adjust to your namespace
data:
  prometheus.yml: |-
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
      external_labels:
        cluster: "aks11"
    scrape_configs:
      - job_name: "prometheus"
        static_configs:
          - targets: ["localhost:9090"]
    remote_write:
      - url: "https://aks-amw-0mi2.eastus-1.metrics.ingest.monitor.azure.com/dataCollectionRules/dcr-00000000000000000000000000000000/streams/Microsoft-PrometheusMetrics/api/v1/write?api-version=2023-04-24"
        azuread:
          cloud: 'AzurePublic'
          managed_identity:
            client_id: "00001111-aaaa-2222-bbbb-3333cccc4444"
```

Use the following command to apply the configuration file updates.

```shell
kubectl apply -f <configmap-file-name>.yaml
```

Restart Prometheus to pick up the new configuration. If you are using a deployment, you can restart the pods by running the following command.

```shell
kubectl -n monitoring rollout restart deploy <prometheus-deployment-name>
```
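After the restart, you may want to confirm that the new pods are ready and that remote write isn't logging errors. The following is a sketch, not a prescribed verification step: the function name is hypothetical, the search terms are only a starting point, and it assumes the Prometheus pod has a single container (otherwise add `-c <container-name>` to the `logs` call).

```shell
# Sketch: wait for the restarted pods, then scan recent logs for lines that
# mention remote write or an HTTP 403.
# Arguments: namespace, deployment name (placeholders).
check_remote_write_rollout() {
  kubectl -n "$1" rollout status "deploy/$2" --timeout=120s || return 1

  # The search terms are a starting point, not an exhaustive list.
  kubectl -n "$1" logs "deploy/$2" --since=10m |
    grep -iE 'remote|403' || echo "no matching log lines"
}

# Example (not run here):
#   check_remote_write_rollout monitoring <prometheus-deployment-name>
```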

Release notes

For detailed release notes on the remote write sidecar image, see the remote write release notes.

Troubleshoot

HTTP 403 error in the Prometheus log

It takes about 30 minutes for the assignment of the role to take effect. During this time, you may see an HTTP 403 error in the Prometheus log. Check that you have configured the managed identity or Microsoft Entra ID application correctly with the Monitoring Metrics Publisher role on the workspace's DCR. If the configuration is correct, wait 30 minutes for the role assignment to take effect.
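To confirm that the role assignment exists before you wait, you can list the principals that hold the role on the DCR. This is a sketch: the function name is hypothetical, and the argument is the full resource ID of the workspace's DCR. The output is one principal ID per line, which you can compare with the object ID of your managed identity or Entra application's service principal.

```shell
# Sketch: list the principal IDs that hold Monitoring Metrics Publisher
# on the DCR. Argument: resource ID of the DCR (placeholder).
list_metrics_publishers() {
  az role assignment list \
    --scope "$1" \
    --role "Monitoring Metrics Publisher" \
    --query "[].principalId" \
    --output tsv
}

# Example (not run here):
#   list_metrics_publishers "<dcr-resource-id>"
```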

No Kubernetes data is being collected

If data isn't being collected in Managed Prometheus, run the following command to find errors in the remote write container.

```shell
kubectl --namespace <Namespace> describe pod <Prometheus-Pod-Name>
```

Container restarts repeatedly

A container that restarts repeatedly is likely misconfigured. Run the following command to view the configuration values set for the container. Verify the configuration values, especially AZURE_CLIENT_ID and IDENTITY_TYPE.

```shell
kubectl get pod <Prometheus-Pod-Name> -o json | jq -c '.spec.containers[] | select( .name | contains("<Azure-Monitor-Side-Car-Container-Name>"))'
```

The output from this command has the following format:

```json
{"env":[{"name":"INGESTION_URL","value":"https://my-azure-monitor-workspace.eastus2-1.metrics.ingest.monitor.azure.com/dataCollectionRules/dcr-00000000000000000/streams/Microsoft-PrometheusMetrics/api/v1/write?api-version=2021-11-01-preview"},{"name":"LISTENING_PORT","value":"8081"},{"name":"IDENTITY_TYPE","value":"userAssigned"},{"name":"AZURE_CLIENT_ID","value":"00000000-0000-0000-0000-00000000000"}],"image":"mcr.microsoft.com/azuremonitor/prometheus/promdev/prom-remotewrite:prom-remotewrite-20221012.2","imagePullPolicy":"Always","name":"prom-remotewrite","ports":[{"containerPort":8081,"name":"rw-port","protocol":"TCP"}],"resources":{},"terminationMessagePath":"/dev/termination-log","terminationMessagePolicy":"File","volumeMounts":[{"mountPath":"/var/run/secrets/kubernetes.io/serviceaccount","name":"kube-api-access-vbr9d","readOnly":true}]}
```

Data dropped in high-volume environments

The data collection rule (DCR) and data collection endpoint (DCE) for the Azure Monitor workspace are subject to the ingestion limits listed in Azure Monitor service limits. You're most likely to reach these limits when you configure remote write for multiple clusters that send data to the same endpoint.

Consider Remote write tuning to adjust configuration settings for better performance. If you still see data drops, consider creating additional DCRs and DCEs to distribute the ingestion load across multiple endpoints. See Instructions on how to create custom Data collection endpoint (DCE) and custom Data collection rule (DCR) for an existing Azure Monitor workspace (AMW) to ingest Prometheus metrics.
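Remote write tuning in Prometheus is done through the `queue_config` block of a `remote_write` entry. The following sketch writes an illustrative fragment to a scratch file (the file name is hypothetical) so that you can compare it with your own configuration. The field names are Prometheus settings, but every value here is a placeholder chosen for illustration, not a recommendation for Azure Monitor.

```shell
# Write an illustrative queue_config fragment to a scratch file.
# All values are placeholders to tune for your own workload.
cat > queue-config-example.yml <<'EOF'
remote_write:
  - url: "<metrics ingestion endpoint for your Azure Monitor workspace>"
    queue_config:
      capacity: 2500              # samples buffered per shard
      min_shards: 1               # lower bound on parallel senders
      max_shards: 200             # upper bound on parallel senders
      max_samples_per_send: 500   # samples per request
      batch_send_deadline: 5s     # longest wait before a partial batch is sent
      min_backoff: 30ms           # initial retry delay
      max_backoff: 5s             # longest retry delay
EOF
```

Changing one setting at a time and watching for dropped samples makes it easier to tell which change helped.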