
This article helps you plan user provisioning from SAP HCM to Microsoft Entra ID, including architectural considerations for organizations migrating from SAP IDM to Microsoft Entra.

At a high level, this document describes three integration options for SAP HCM, including SAP HCM for SAP S/4HANA On-Premise:

- Option 1: CSV-file based inbound provisioning from SAP HCM

- Option 2: SAP BAPI-based inbound provisioning using the Azure Logic Apps SAP connector

- Option 3: SAP IDocs-based inbound provisioning using the Azure Logic Apps SAP connector

There's also a fourth option:

- Option 4: Organizations that use both SAP SuccessFactors and SAP HCM can also bring identities into Microsoft Entra ID by using SAP Integration Suite to synchronize lists of workers between SAP HCM and SAP SuccessFactors. From there, you can use Microsoft Entra ID connectors to bring employee identities from SuccessFactors into Microsoft Entra ID, or to provision them from SuccessFactors into on-premises Active Directory.

Terminology

| Term | Definition |
|---|---|
| AS ABAP | SAP NetWeaver Application Server platform supporting Advanced Business Application Programming (ABAP) runtime. |
| SAP NetWeaver | SAP’s application server platform hosting on-premises SAP ERP applications, including SAP HCM, and providing integration capabilities. |
| BAPI | Business Application Programming Interface; enables external applications to access SAP business object data and processes. |
| IDocs | Intermediate Documents; standardized SAP format for exchanging business data between systems, consisting of control and data records for batch processing. |
| RFC | Remote Function Calls; SAP protocol for communication between SAP and external systems, supporting inbound and outbound function calls via RFC ports. |
| FM | Function Modules; BAPI function modules configured in SAP HCM. |

Architecture overview

SAP HCM Tables and Infotypes

Employee data in SAP HCM is stored in a SQL relational database. With access to the right function modules (FM) and permissions, it's possible to query the backend database tables that store employee information. Each of these tables has a functional equivalent in SAP HCM called an infotype, which logically groups related data. SAP HCM admins use infotype terminology when managing employee data in SAP HCM screens. The following table lists important SAP HCM tables that store employee data. The personnel number field (PERNR) uniquely identifies every employee in these tables.

| Table name | Infotype | Remarks |
|---|---|---|
| PA0000 | 0000 – Actions | Captures actions performed by HR on the employee. Example fields: employment status, action type, action name, start date, end date. |
| PA0001 | 0001 – Employee Org assignments | Captures organization data for an employee. Example fields: company code, cost center, business area. |
| PA0002 | 0002 – Personal Data | Captures personal data. Example fields: first name, last name, date of birth, nationality. |
| PA0006 | 0006 – Addresses | Captures address and phone data. Example fields: street, city, country, phone. |

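Example SQL query to fetch active employees’ username, status, first name, and last name. Table names and the date function (SYSDATE() here) vary by database, so adjust them as needed:

```sql
-- Active employees (STAT2 = 3); the p2.ENDDA condition skips historical PA0002 records.
SELECT DISTINCT p0.PERNR AS username, CASE WHEN p0.STAT2 = 3 THEN 1 END AS statuskey,
       p2.NACHN AS last_name, p2.VORNA AS first_name
FROM SAP_PA0000 p0
LEFT JOIN SAP_PA0002 p2 ON p0.PERNR = p2.PERNR AND p2.ENDDA > SYSDATE()
WHERE p0.STAT2 = 3 AND p0.ENDDA > SYSDATE()
```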
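SAP HCM stores validity dates (BEGDA, ENDDA) as 8-character YYYYMMDD values. The query above keeps employees whose PA0000 record has STAT2 = 3. The following minimal Python sketch applies the same idea to rows that have already been read into dictionaries. It uses the usual BEGDA <= today <= ENDDA validity check, which is slightly stricter than the query's ENDDA filter. The row layout and function names are illustrative and aren't part of any SAP API.

```python
from datetime import date

# SAP DATS fields such as BEGDA and ENDDA are 8-character strings (YYYYMMDD).
# 99991231 is the conventional end date for "valid until further notice".
HIGH_DATE = "99991231"
ACTIVE_STAT2 = "3"  # PA0000-STAT2 employment status 3 = active


def to_dats(d: date) -> str:
    """Format a Python date as an SAP DATS string."""
    return d.strftime("%Y%m%d")


def is_current(record: dict, today: date) -> bool:
    """True if the record's validity period (BEGDA..ENDDA) includes today.

    Zero-padded YYYYMMDD strings sort chronologically, so plain string
    comparison is enough.
    """
    key = to_dats(today)
    return record["BEGDA"] <= key <= record["ENDDA"]


def is_active_employee(pa0000_rows: list, today: date) -> bool:
    """True if the PA0000 row valid today has employment status 3 (active)."""
    return any(
        is_current(row, today) and row["STAT2"] == ACTIVE_STAT2
        for row in pa0000_rows
    )


# Example: one employee who was active until the end of 2023, then withdrawn (STAT2 = 0).
rows = [
    {"PERNR": "00001001", "BEGDA": "20200101", "ENDDA": "20231231", "STAT2": "3"},
    {"PERNR": "00001001", "BEGDA": "20240101", "ENDDA": HIGH_DATE, "STAT2": "0"},
]
print(is_active_employee(rows, date(2023, 6, 1)))  # True
print(is_active_employee(rows, date(2024, 6, 1)))  # False
```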

SAP HCM and Entra Inbound Provisioning options

This section describes options that SAP HCM customers can consider for implementing inbound provisioning from SAP HCM to Microsoft Entra ID and on-premises Active Directory. Use the following decision tree to determine which option to use.

Option 1: CSV-file based inbound provisioning

When to use this approach

Use this approach if you're using both SAP HCM and SAP SuccessFactors in side-by-side deployment mode, where SAP SuccessFactors isn't yet operational as the authoritative, primary HR data source. This approach provides faster time-to-value and aligns with the goal of eventually moving to SAP SuccessFactors.

In what scenarios will a customer have deployed both SAP HCM and SAP SuccessFactors?

It’s common to start an SAP SuccessFactors deployment with ancillary HR modules, such as Performance and Goals or Learning, before moving core HR modules to SAP SuccessFactors. In this scenario, the on-premises SAP HCM system remains the authoritative source for employee and organization data.

Why is this approach recommended only for SAP HCM customers with an SAP SuccessFactors deployment plan?

Only customers with this side-by-side deployment configuration are eligible to use SAP’s Add-on Integration Module for SAP HCM and SuccessFactors, which simplifies the periodic export of employee data into CSV files.

High-level data flow and configuration steps

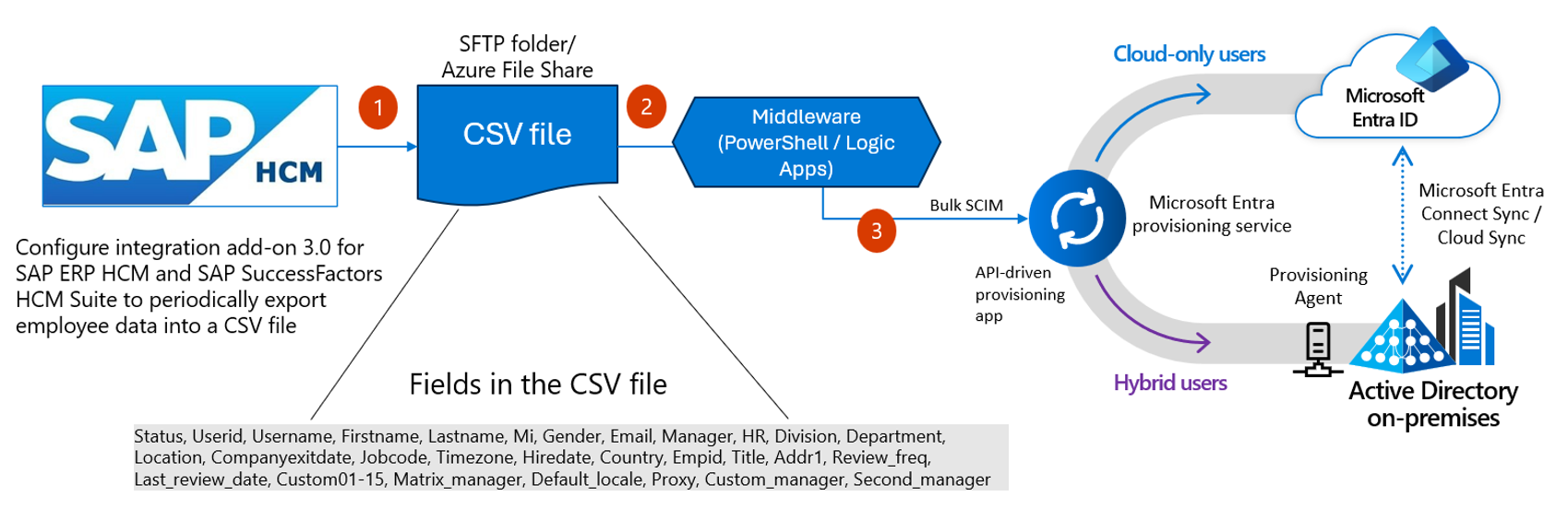

This diagram illustrates the high-level data flow and configuration steps.

- Step 1: In SAP HCM, configure periodic export of CSV files with employee data. When you run the integration for the first time, we recommend a full export for the initial sync. After the initial sync is complete, you can perform incremental exports that capture only changes. The exported CSV files can be stored in encrypted format on an SFTP server or in an Azure file share.

  - References:

    - Replicating employee data from SAP ERP HCM

    - 2214465 - Integration Add-On 3.0 for SAP ERP HCM - SAP for Me (requires SAP support login)

    - Slide deck explaining the integration between SAP ERP HCM and SuccessFactors, presented at the SBN Conference 2019. To download the presentation from the conference site, go to Day 1 > Wed 13:30-14:10 > Integration between SAP ERP HCM and SuccessFactors (Trygve Berg, Capgemini).

Note

CSV files can contain delta (incremental) data.


- Step 2: In Microsoft Entra, configure the API-driven provisioning app to receive employee data from SAP HCM.

- Step 3: Configure a middleware tool to decrypt and read the CSV file, convert it to a SCIM bulk payload, and send the data to the API endpoint configured in Step 2. The CSV file can be stored in a staging ___location such as an Azure file share. We recommend implementing validation and circuit-breaking logic in the middleware tool to keep bad data from flowing into Microsoft Entra ID. For example, if the employeeType value is invalid, skip the record. If more than a certain percentage of HR records have invalid data, stop the bulk upload operation.
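The Step 3 middleware logic can be sketched as follows. This is a minimal Python example rather than a production tool, and it makes several assumptions:

- The CSV file has already been decrypted.
- The column names (PERNR, FIRST_NAME, LAST_NAME, EMPLOYEE_TYPE, STATUS), the list of valid employee types, and the 10% circuit-breaker threshold are all hypothetical.
- Mapping EMPLOYEE_TYPE to userType is also an assumption. Use whatever attributes your provisioning app's attribute mappings expect.

The bulk request envelope follows the SCIM BulkRequest shape used by API-driven provisioning, with one POST to /Users per worker. Decryption and the HTTP call to the API endpoint aren't shown.

```python
import csv
import io
import uuid

# Hypothetical values: replace with the employee types and threshold you need.
VALID_EMPLOYEE_TYPES = {"Employee", "Contractor", "Intern"}
MAX_INVALID_RATIO = 0.10  # stop the upload if more than 10% of rows are invalid

USER_SCHEMA = "urn:ietf:params:scim:schemas:core:2.0:User"
ENTERPRISE_SCHEMA = "urn:ietf:params:scim:schemas:extension:enterprise:2.0:User"
BULK_SCHEMA = "urn:ietf:params:scim:api:messages:2.0:BulkRequest"


class CircuitBreakerTripped(Exception):
    """Raised when too many records are invalid to risk an upload."""


def is_valid(row: dict) -> bool:
    """Per-record validation: a personnel number and a known employee type."""
    return bool(row.get("PERNR")) and row.get("EMPLOYEE_TYPE") in VALID_EMPLOYEE_TYPES


def row_to_scim_user(row: dict) -> dict:
    """Map one CSV row (hypothetical column names) to a SCIM user resource."""
    return {
        "schemas": [USER_SCHEMA, ENTERPRISE_SCHEMA],
        "externalId": row["PERNR"],
        "name": {"givenName": row["FIRST_NAME"], "familyName": row["LAST_NAME"]},
        "userType": row["EMPLOYEE_TYPE"],  # assumed target attribute
        "active": row["STATUS"] == "3",  # assumes STAT2-style status codes
        ENTERPRISE_SCHEMA: {"employeeNumber": row["PERNR"]},
    }


def csv_to_bulk_request(csv_text: str):
    """Skip invalid rows, trip the breaker if too many fail, else build the request."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    valid = [row for row in rows if is_valid(row)]
    skipped = [row for row in rows if not is_valid(row)]
    if rows and len(skipped) / len(rows) > MAX_INVALID_RATIO:
        raise CircuitBreakerTripped(f"{len(skipped)} of {len(rows)} records are invalid")
    request = {
        "schemas": [BULK_SCHEMA],
        "Operations": [
            {"method": "POST", "bulkId": str(uuid.uuid4()), "path": "/Users",
             "data": row_to_scim_user(row)}
            for row in valid
        ],
        "failOnErrors": None,
    }
    return request, skipped
```

Suppose a file has 20 rows and one of them has an unknown employee type. In that case, csv_to_bulk_request returns 19 operations plus the skipped row. If 3 of the 20 rows are invalid (15%), it raises CircuitBreakerTripped instead, and nothing is uploaded.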

Deployment variations

If you don't have access to the SAP-provided add-on integration module, or don't plan to use SuccessFactors, you can still use the CSV approach by building custom automation in SAP HCM that regularly exports CSV files for both full sync and incremental sync.
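A custom export might only be able to produce full files. In that case, the middleware can still derive an incremental set by comparing the latest export with the previous one. The following minimal Python sketch assumes both files are keyed by a hypothetical PERNR column. It shows one possible design and isn't a feature of the SAP add-on. The sketch also leaves open how to treat removed records (for example, by disabling the account), because that's a policy decision.

```python
import csv
import io


def load_snapshot(csv_text: str, key: str = "PERNR") -> dict:
    """Index a full CSV export by personnel number."""
    return {row[key]: row for row in csv.DictReader(io.StringIO(csv_text))}


def compute_delta(previous: dict, current: dict) -> dict:
    """Compare two full exports and keep only new, changed, and missing records."""
    added = [current[k] for k in sorted(current.keys() - previous.keys())]
    removed = [previous[k] for k in sorted(previous.keys() - current.keys())]
    changed = [
        current[k]
        for k in sorted(current.keys() & previous.keys())
        if current[k] != previous[k]
    ]
    return {"added": added, "changed": changed, "removed": removed}


yesterday = load_snapshot("PERNR,LAST_NAME\n00000001,Adams\n00000002,Baker\n")
today = load_snapshot("PERNR,LAST_NAME\n00000002,Brown\n00000003,Clark\n")
delta = compute_delta(yesterday, today)
print([r["PERNR"] for r in delta["added"]])    # ['00000003']
print([r["PERNR"] for r in delta["changed"]])  # ['00000002']
print([r["PERNR"] for r in delta["removed"]])  # ['00000001']
```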

Option 2: SAP BAPI-based inbound provisioning

When to use this approach

Use this approach if you don't have SAP SuccessFactors deployed and the solution architecture calls for a scheduled periodic sync using Azure Logic Apps due to system constraints or deployment requirements. This integration uses the Azure Logic Apps SAP connector. Azure Logic Apps ships two connector types for SAP:

- SAP built-in connector, which is available only for Standard workflows in single-tenant Azure Logic Apps.

- SAP managed connector that's hosted and run in multitenant Azure. It’s available with both Standard and Consumption logic app workflows.

The SAP built-in connector has certain advantages over the managed connector, as documented in the Azure Logic Apps SAP connector article. For example, with the SAP built-in connector, on-premises connections don't require the on-premises data gateway, and dedicated actions provide a better experience for stateful BAPIs and RFC transactions.

High-level data flow and configuration steps

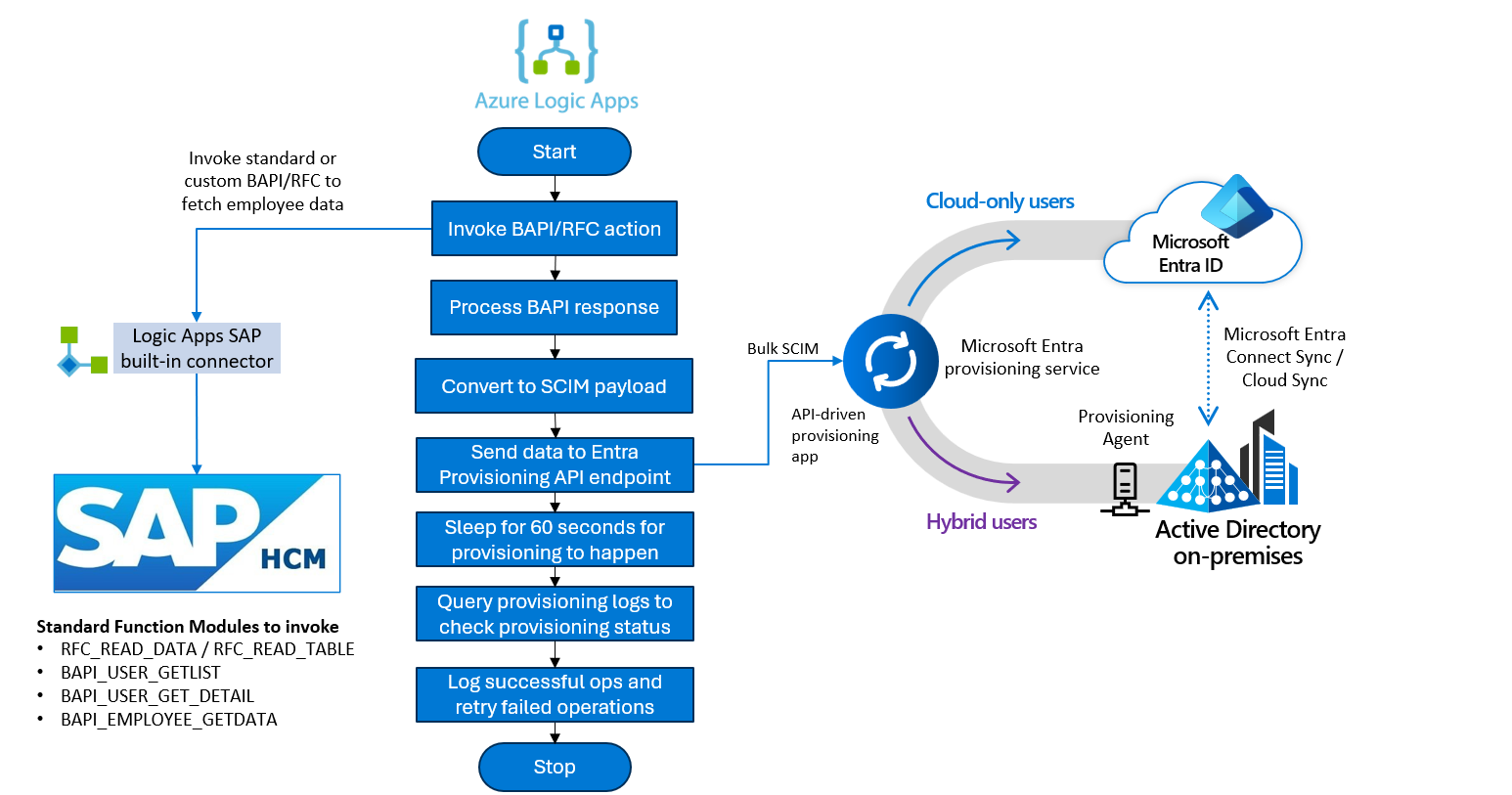

This diagram illustrates the high-level data flow and configuration steps for SAP BAPI-based inbound provisioning.

Note

The diagram depicts the deployment components for the Azure Logic Apps SAP built-in connector.

Networking considerations:

- If you prefer using the Azure Logic Apps SAP managed connector, consider using Azure ExpressRoute, which permits peering the Logic Apps Standard network with the on-premises SAP network. We don't recommend the on-premises data gateway component because it reduces security.

- If the SAP HCM system already runs on Azure, the connection from Logic Apps to your SAP system can be made within the same virtual network (VNet), without the on-premises data gateway.

Step 1: Configure prerequisites in SAP HCM to use the SAP built-in connector. This includes setting up an SAP system account with appropriate authorizations to invoke the following function modules:

- RFC_READ_DATA

- RFC_READ_TABLE

- BAPI_USER_GETLIST

- BAPI_USER_GET_DETAIL

- BAPI_EMPLOYEE_GETDATA

- RFC_METADATA

- RFC_METADATA_GET

- RFC_METADATA_GET_TIMESTAMP

- RPY_BOR_TREE_INIT

- SWO_QUERY_METHODS

- SWO_QUERY_API_METHODS

The RPY* and SWO* function modules enable the dedicated BAPI actions. These actions list the available business objects and discover which ABAP methods are available to act on them. They provide better discoverability and more specific input and output metadata, so we recommend them over directly calling the RFC implementation of the BAPI method.

If you've defined custom function modules, include them in the list as well.

Step 2: In Microsoft Entra, configure the API-driven provisioning app to receive employee data from SAP HCM.

Step 3: Configure a logic app workflow that invokes the appropriate BAPI function modules (preferably through the connector's [BAPI] Call method in SAP action), processes the response, builds a SCIM payload, and sends it to the Microsoft Entra provisioning API endpoint. As a best practice to stay within API-driven provisioning usage limits, we recommend batching multiple changes into a single SCIM bulk request rather than submitting one SCIM bulk request for each change.
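To follow the batching recommendation, changes can be collapsed per worker and then split into bulk requests. The following minimal Python sketch assumes that each change is already a SCIM user resource keyed by externalId. The limit of 50 operations per request is an assumption based on the API-driven provisioning limits as we understand them, so confirm the current value before relying on it.

```python
from typing import Iterable, Iterator, List

# Assumed per-request limit; confirm against the current
# API-driven provisioning limits before relying on it.
MAX_OPERATIONS_PER_REQUEST = 50
BULK_SCHEMA = "urn:ietf:params:scim:api:messages:2.0:BulkRequest"


def latest_per_employee(changes: Iterable[dict]) -> List[dict]:
    """Collapse several changes for one employee into the last change seen.

    Each change is a SCIM user resource keyed by externalId.
    """
    latest = {}
    for change in changes:
        latest[change["externalId"]] = change  # later changes overwrite earlier ones
    return list(latest.values())


def batch_bulk_requests(users: List[dict], size: int = MAX_OPERATIONS_PER_REQUEST) -> Iterator[dict]:
    """Yield SCIM bulk requests that each carry at most `size` operations."""
    for start in range(0, len(users), size):
        chunk = users[start:start + size]
        yield {
            "schemas": [BULK_SCHEMA],
            "Operations": [
                {"method": "POST", "bulkId": str(start + i), "path": "/Users", "data": user}
                for i, user in enumerate(chunk)
            ],
            "failOnErrors": None,
        }
```

For example, 120 distinct workers produce three bulk requests with 50, 50, and 20 operations.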

Step 4: Query the Microsoft Entra provisioning logs endpoint to check the status of the provisioning operation. Record successful operations and retry failures.
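Step 4 can be reduced to a simple triage rule: only the latest attempt for each worker counts. The following minimal Python sketch assumes that the log entries have already been retrieved (for example, from the Microsoft Graph provisioning logs) and parsed into dictionaries. It expects activityDateTime, sourceIdentity.id, and provisioningStatusInfo.status fields, following the provisioningObjectSummary resource as we understand it. Verify these field names against the API reference. Fetching the logs and resubmitting the failed records aren't shown.

```python
def triage_provisioning_logs(entries: list) -> tuple:
    """Split log entries into succeeded and to-retry source IDs.

    Only the most recent attempt per source identity counts, so a record
    that failed and then succeeded on a later run isn't retried.
    Skipped and warning entries are left out of both lists for manual review.
    """
    latest = {}
    # ISO 8601 timestamps in one consistent format sort chronologically as strings.
    for entry in sorted(entries, key=lambda e: e["activityDateTime"]):
        latest[entry["sourceIdentity"]["id"]] = entry
    succeeded, to_retry = [], []
    for source_id in sorted(latest):
        status = latest[source_id]["provisioningStatusInfo"]["status"]
        if status == "success":
            succeeded.append(source_id)
        elif status == "failure":
            to_retry.append(source_id)
    return succeeded, to_retry
```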

Define a custom RFC for delta imports

Use these steps to create a custom RFC in the SAP GUI/ABAP Workbench. This custom RFC returns attributes of users that have changed between a specific start date and end date.

Define the RFC Function Module

a. Use transaction SE37 to create a custom RFC-enabled function module that accepts a start date (p_begda) and an end date (p_endda) as input.

b. In the function module, write logic to query the relevant tables (for example, PA0001 and PA0002) for user attributes.

c. Filter the data based on the date of the last change (for example, by using the AEDTM "Changed on" field of each PA table).

Implement the Logic

a. Use ABAP code to fetch the required attributes and apply the filter for changes within the requested date range (p_begda to p_endda).

b. Example ABAP snippet:

```abap
FORM read_database USING p_begda p_endda.
  " lv_pernr and lt_pernr are assumed to be declared globally;
  " lt_pernr has the fields pernr, endda, begda (in that order).
  IF lv_pernr IS INITIAL.
    " Employee list (Actions)
    SELECT pernr endda begda FROM pa0000 INTO TABLE lt_pernr
      WHERE aedtm BETWEEN p_begda AND p_endda.
    " Org assignment details
    SELECT pernr endda begda FROM pa0001 APPENDING TABLE lt_pernr
      WHERE aedtm BETWEEN p_begda AND p_endda.
    " Personal details
    SELECT pernr endda begda FROM pa0002 APPENDING TABLE lt_pernr
      WHERE aedtm BETWEEN p_begda AND p_endda.
    " An employee changed in several infotypes appears once per table.
  ENDIF.
ENDFORM.
```

c. Ensure the function module returns the data in a structured format (for example, the internal table lt_pernr).

Enable RFC Access

a. Mark the function module as RFC-enabled in its attributes.

b. Test the RFC using transaction SM59 to ensure it can be called remotely.

Test and Deploy

a. Test the RFC locally and remotely to verify its functionality.

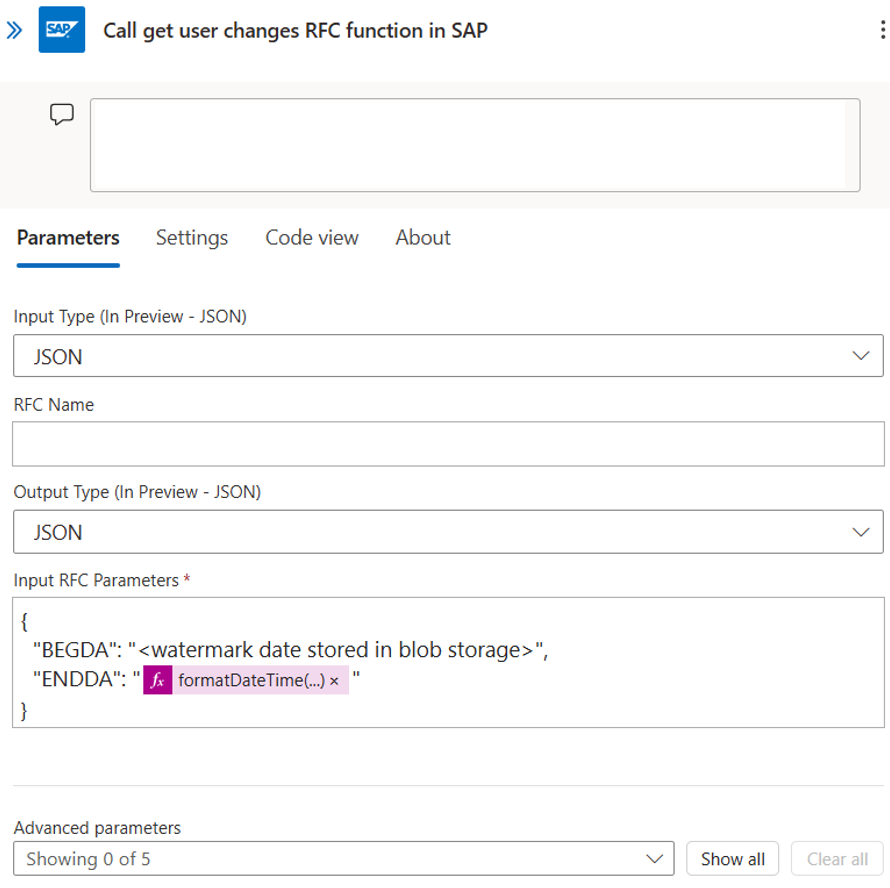

b. Deploy the RFC in your ECC system and document its usage.

Configure RFC call in Logic Apps

a. Specify the SAP system details, including the RFC name you created in SAP ECC.

b. Input any required parameters for the RFC. For example, in the previous screenshot, the parameter BEGDA points to a watermark date stored in Azure Blob Storage that corresponds to the previous run, while ENDDA is the start date of the current logic app run.

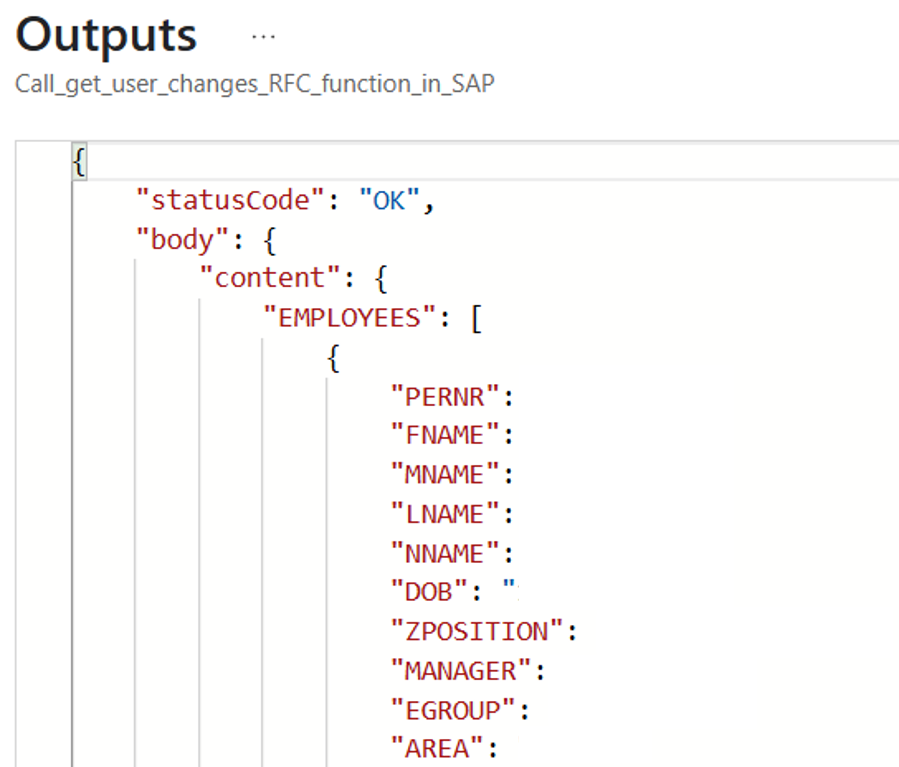
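The BEGDA/ENDDA watermark handling described above can be sketched as follows. This minimal Python example assumes that the watermark is stored as a YYYYMMDD string. The callables are hypothetical stand-ins for the Blob Storage read and write, the RFC call, and the SCIM upload. FIRST_RUN_BEGDA is a hypothetical start date for the initial full load.

```python
from datetime import datetime
from typing import Callable, Optional

FIRST_RUN_BEGDA = "19000101"  # hypothetical start date for the first (full) run


def delta_window(previous_watermark: Optional[str], run_started: datetime) -> tuple:
    """Return (BEGDA, ENDDA) as SAP DATS strings (YYYYMMDD).

    BEGDA is the watermark saved by the previous successful run;
    ENDDA is the start date of the current run.
    """
    begda = previous_watermark or FIRST_RUN_BEGDA
    return begda, run_started.strftime("%Y%m%d")


def run_delta_sync(
    read_watermark: Callable[[], Optional[str]],
    call_rfc: Callable[[str, str], list],
    upload: Callable[[list], None],
    write_watermark: Callable[[str], None],
    run_started: datetime,
) -> int:
    """One scheduled run. The callables stand in for the Blob Storage read,
    the RFC action, the SCIM upload, and the Blob Storage write.

    The watermark is written only after upload() returns, so a failed run
    repeats the same window on the next schedule.
    """
    begda, endda = delta_window(read_watermark(), run_started)
    rows = call_rfc(begda, endda)
    upload(rows)  # expected to raise on failure
    write_watermark(endda)
    return len(rows)
```

The ABAP filter uses an inclusive BETWEEN on the AEDTM date, so consecutive windows share their boundary date, and a change made on that day can be read twice. This overlap avoids missing changes made later on the same day. It's acceptable as long as resending an unchanged record is harmless in your setup.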

Parse the JSON Response from the RFC Call and use it to create the SCIM bulk request payload.
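The ABAP routine appends rows from PA0000, PA0001, and PA0002, so the same employee can appear several times in the RFC response. The following minimal Python sketch shows that parsing step. It assumes a hypothetical JSON shape in which the changed rows arrive under a table named LT_PERNR, so check the actual output of your RFC action. You can then use the distinct personnel numbers to read full attributes (for example, with BAPI_EMPLOYEE_GETDATA) before building the SCIM bulk request.

```python
import json


def changed_personnel_numbers(response_body: str, table_name: str = "LT_PERNR") -> list:
    """Return the distinct PERNRs in the RFC response, in sorted order.

    One employee can appear once per queried table, so duplicates are removed.
    `table_name` is the hypothetical name of the table parameter in the JSON.
    """
    rows = json.loads(response_body).get(table_name, [])
    return sorted({row["PERNR"] for row in rows})


body = '{"LT_PERNR": [{"PERNR": "00001002"}, {"PERNR": "00001001"}, {"PERNR": "00001002"}]}'
print(changed_personnel_numbers(body))  # ['00001001', '00001002']
```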

Option 3: SAP IDocs-based inbound provisioning

When to use this approach

Use this approach if you don't have SAP SuccessFactors deployed and the solution architecture calls for event-based sync using Azure Logic Apps due to system constraints or deployment requirements.

This integration uses the Azure Logic Apps SAP connector. Azure Logic Apps ships two connector types for SAP:

- SAP built-in connector, which is available only for Standard workflows in single-tenant Azure Logic Apps.

- SAP managed connector, which is hosted and run in multitenant Azure. It’s available with both Standard and Consumption logic app workflows.

The SAP built-in connector has certain advantages over the managed connector, as documented in the Azure Logic Apps SAP connector article. For example, with the SAP built-in connector, on-premises connections don't require the on-premises data gateway. The connector also supports IDoc deduplication and handles IDoc file formats better in the When a message is received trigger.

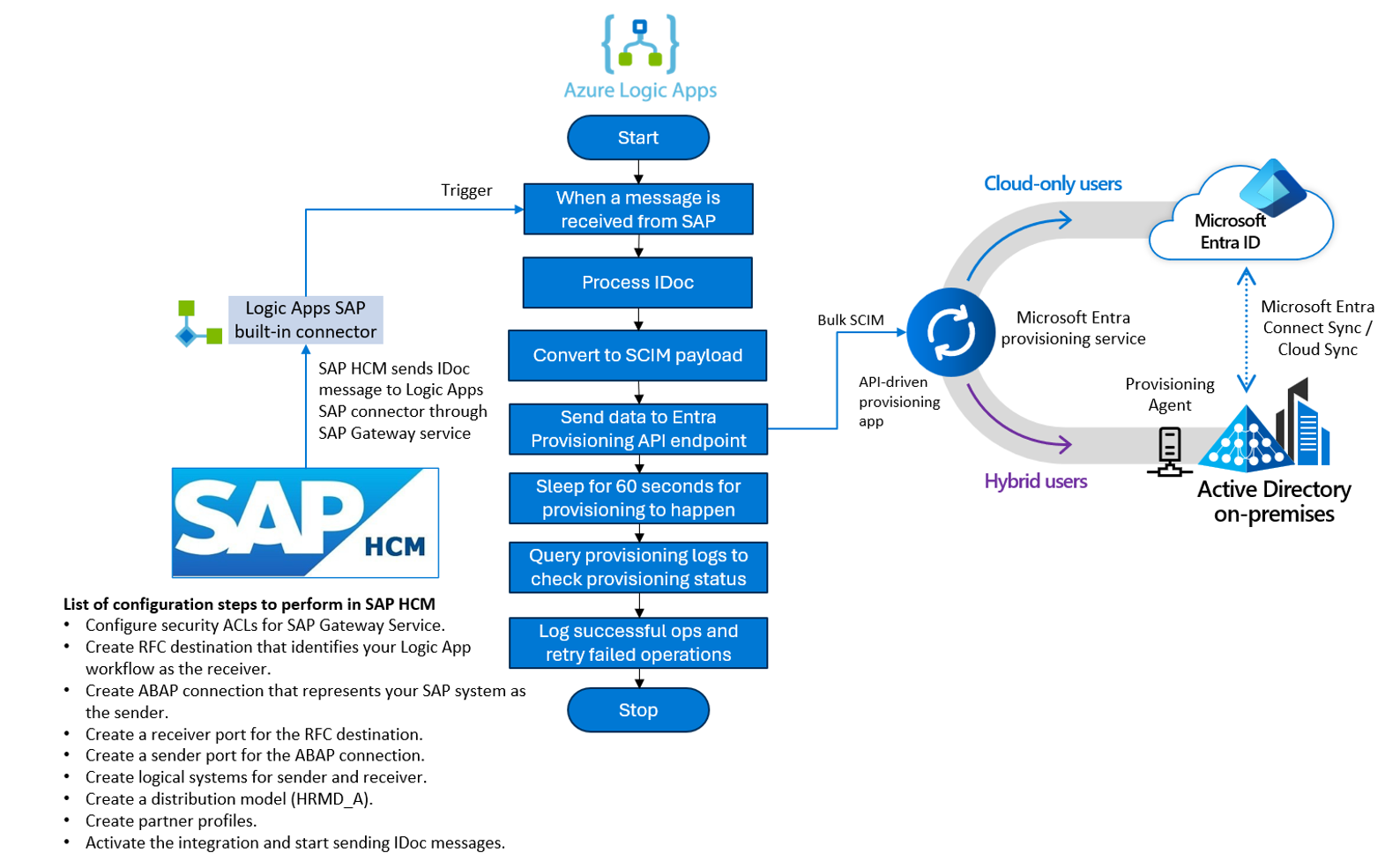

High level data flow and configuration steps

This diagram illustrates the high-level data flow and configuration steps.

Note

The diagram depicts the deployment components for the Azure Logic Apps SAP built-in connector.

Networking considerations:

- If you prefer using the Azure Logic Apps SAP managed connector, consider using Azure ExpressRoute, which permits peering the Logic Apps Standard network with the on-premises SAP network. We don't recommend the on-premises data gateway component because it reduces security.

- If the SAP HCM system already runs on Azure, the connection from Azure Logic Apps to your SAP system can be made within the same virtual network (VNet), without the on-premises data gateway.

Step 1: Configure prerequisites in SAP HCM to use the Azure Logic Apps SAP built-in connector. This step includes setting up an SAP system account with appropriate authorizations to invoke BAPI function modules and IDoc messages. Complete the steps to set up and test sending IDocs from SAP to your logic app workflow.

Step 2: In Microsoft Entra, configure the API-driven provisioning app to receive employee data from SAP HCM.

Step 3: Build a logic app workflow that starts with the When a message is received trigger, processes the IDoc message, builds a SCIM payload, and sends it to the Microsoft Entra provisioning API endpoint. As a best practice to stay within API-driven provisioning usage limits, we recommend batching multiple changes into a single SCIM bulk request rather than submitting one SCIM bulk request for each change.

- References:

  - API-driven inbound provisioning with Azure Logic Apps

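Processing the IDoc in Step 3 mostly means reading fields out of its segments. The following minimal Python sketch assumes a simplified, hypothetical HR master data IDoc layout in which an E1P0002 segment carries infotype 0002 fields (PERNR, NACHN, VORNA). Real IDoc XML from the connector can include more segments, control records, and namespaces, so check your IDoc type's segment definitions before mapping fields. Tag namespaces are ignored here.

```python
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Drop an optional '{namespace}' prefix from an element tag."""
    return tag.rsplit("}", 1)[-1]


def users_from_idoc(xml_text: str) -> list:
    """Map each E1P0002 (personal data) segment to a partial SCIM user."""
    users = []
    for segment in ET.fromstring(xml_text).iter():
        if local_name(segment.tag) != "E1P0002":
            continue
        fields = {local_name(child.tag): (child.text or "").strip() for child in segment}
        users.append({
            "externalId": fields.get("PERNR", ""),
            "name": {"givenName": fields.get("VORNA", ""), "familyName": fields.get("NACHN", "")},
        })
    return users


# Simplified, hypothetical IDoc fragment for illustration only.
sample = """<HRMD_A07><IDOC>
  <E1PLOGI><E1PITYP>
    <E1P0002><PERNR>00001001</PERNR><NACHN>Silva</NACHN><VORNA>Ana</VORNA></E1P0002>
  </E1PITYP></E1PLOGI>
</IDOC></HRMD_A07>"""
print(users_from_idoc(sample))
# [{'externalId': '00001001', 'name': {'givenName': 'Ana', 'familyName': 'Silva'}}]
```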

Step 4: Query the Microsoft Entra provisioning logs endpoint to check the status of the provisioning operation. Record successful operations and retry failures.

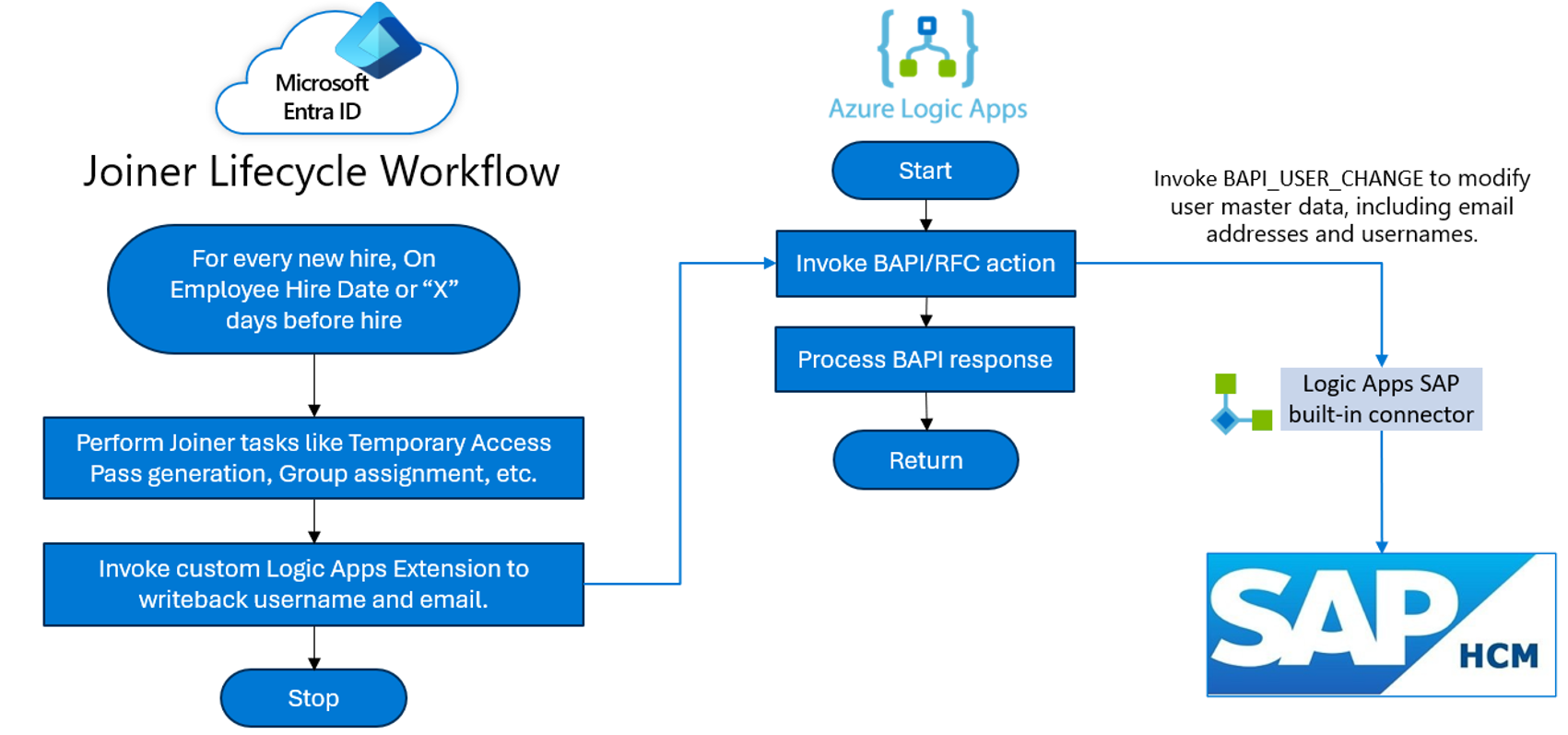

Configure Writeback to SAP HCM

After a worker record from SAP HCM is provisioned in Entra ID, there's often a business need to write back IT-managed attributes like email and username to SAP HCM.

We recommend using Microsoft Entra ID Governance -> Joiner Lifecycle Workflow with a custom Logic Apps extension for this scenario. The flow schematic is as shown in this diagram.

Follow these steps to configure writeback:

- Configure a Joiner lifecycle workflow that triggers on the employee's hire date.

- Configure a custom Logic Apps extension as part of the Joiner workflow.

- In this Logic Apps extension, call the BAPI_USER_CHANGE function to update the user’s email address and username.
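BAPI_USER_CHANGE follows the usual BAPI pattern of pairing a data structure with a change-flag structure. The following minimal Python sketch builds the parameters for an e-mail update. It assumes the ADDRESS and ADDRESSX parameters with the E_MAIL field, based on our understanding of the BAPI's interface, so verify them in transaction SE37. The sap_user and email inputs would come from the Lifecycle Workflow callout, whose payload shape isn't shown. The sketch covers the e-mail address only and doesn't show the Logic Apps action that sends these parameters to SAP.

```python
def bapi_user_change_params(sap_user: str, email: str) -> dict:
    """Build BAPI_USER_CHANGE parameters that update only the e-mail address.

    ADDRESS carries the new value; ADDRESSX marks with "X" which ADDRESS
    fields the BAPI should change, so other address fields stay untouched.
    """
    if "@" not in email:
        raise ValueError(f"not an e-mail address: {email!r}")
    return {
        "USERNAME": sap_user.strip().upper(),  # SAP user IDs are stored in upper case
        "ADDRESS": {"E_MAIL": email},
        "ADDRESSX": {"E_MAIL": "X"},
    }
```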

Acknowledgments

We thank the following partners for their help reviewing and contributing to this article: