Dataflow Gen2 supports Continuous Integration/Continuous Deployment (CI/CD) and Git integration. You can create, edit, and manage dataflows in a Git repository connected to your Fabric workspace. Use deployment pipelines to automate moving dataflows between workspaces. This article explains how to use these features in Fabric Data Factory.

Features

With CI/CD and Git integration, Dataflow Gen2 lets you:

- Integrate Git with Dataflow Gen2.

- Automate dataflow deployment between workspaces using deployment pipelines.

- Refresh and edit Dataflow Gen2 settings with Fabric tools.

- Create Dataflow Gen2 directly in a workspace folder.

- Use Public APIs (preview) to manage Dataflow Gen2 with CI/CD and Git integration.

Prerequisites

Before you start, make sure you:

- Have a Microsoft Fabric tenant account with an active subscription. Create an account for free.

- Use a Microsoft Fabric-enabled workspace.

- Enable Git integration for your workspace. Learn how to enable Git integration.

Create a Dataflow Gen2 with CI/CD and Git integration

Creating a Dataflow Gen2 with CI/CD and Git integration allows you to manage your dataflows efficiently within a connected Git repository. Follow these steps to get started:

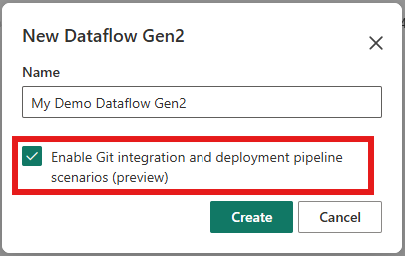

1. In the Fabric workspace, select Create new item, then select Dataflow Gen2.

2. Name your dataflow, enable Git integration, and select Create.

3. The dataflow opens in the authoring canvas, where you can start building it.

4. When you're done, select Save and run.

5. After saving, the dataflow shows an "uncommitted" status.

6. To commit the dataflow to Git, select the source control icon in the top-right corner.

7. Select the changes to commit, then select Commit.

Your Dataflow Gen2 with CI/CD and Git integration is ready. For best practices, see the Scenario 2 - Develop using another workspace tutorial.
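
The commit step can also be scripted. The following is a minimal sketch, not an official sample: it assumes the Fabric REST API exposes a `commitToGit` operation under the workspace's `git` path and accepts a body with `mode` and `comment` fields. The base URL, path, and field names are assumptions to verify against the Fabric REST API reference, and `build_commit_request` is a hypothetical helper. The function only builds the request; it doesn't send it.

```python
import json
import urllib.request

# Assumed base URL for the Fabric REST API; verify before use.
FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_commit_request(workspace_id: str, token: str, comment: str) -> urllib.request.Request:
    """Build (but don't send) a request that commits all pending workspace changes to Git.

    The path and body shape are assumptions, not confirmed by this article.
    """
    url = f"{FABRIC_API}/workspaces/{workspace_id}/git/commitToGit"
    body = {"mode": "All", "comment": comment}  # "All" = commit every uncommitted item (assumed)
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send the request, pass the result to `urllib.request.urlopen` with a valid Microsoft Entra access token.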

Refresh a Dataflow Gen2

Refreshing a Dataflow Gen2 ensures your data is up-to-date. You can refresh manually or set up a schedule to automate the process.

Refresh now

1. In the Fabric workspace, select the ellipsis next to the dataflow.

2. Select Refresh now.

Schedule a refresh

1. In the Fabric workspace, select the ellipsis next to the dataflow.

2. Select Schedule.

3. On the schedule page, set the refresh frequency, start time, and end time, then apply the changes.

To refresh immediately, select Refresh.
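
The same three settings (frequency, start time, end time) can be expressed as a schedule definition for use in automation. The sketch below builds such a definition as a Python dictionary. The field names (`enabled`, `configuration`, `type`, `interval`, `startDateTime`, `endDateTime`, `localTimeZoneId`) are assumptions modeled on the Fabric job scheduler API and should be checked against its reference. `build_schedule_body` is a hypothetical helper.

```python
from datetime import datetime


def build_schedule_body(start: datetime, end: datetime,
                        interval_minutes: int, time_zone_id: str = "UTC") -> dict:
    """Return a schedule definition with a fixed refresh interval.

    Field names are assumed, not confirmed by this article.
    """
    if end <= start:
        raise ValueError("end time must be after start time")
    if interval_minutes <= 0:
        raise ValueError("interval must be a positive number of minutes")
    return {
        "enabled": True,
        "configuration": {
            "type": "Cron",                # interval-based schedule (assumed type name)
            "interval": interval_minutes,  # refresh frequency in minutes
            "startDateTime": start.isoformat(timespec="seconds"),
            "endDateTime": end.isoformat(timespec="seconds"),
            "localTimeZoneId": time_zone_id,
        },
    }


# Example: refresh every 60 minutes throughout 2025.
schedule = build_schedule_body(datetime(2025, 1, 1, 6, 0), datetime(2025, 12, 31, 6, 0), 60)
```

Validating the time window up front catches an end time that precedes the start time before any request is made.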

View refresh history and settings

Understanding the refresh history and managing settings helps you monitor and control your Dataflow Gen2. Here's how you can access these options.

To view refresh history, select the recent runs tab in the dropdown menu or go to the monitor hub and select the dataflow.

Access dataflow settings by selecting the ellipsis next to the dataflow and choosing Settings.

Save replaces publish

Saving a Dataflow Gen2 with CI/CD and Git integration automatically publishes your changes, so there's no separate publish step. To discard changes, select Discard changes when you close the editor.

Validation

When saving, the system checks if the dataflow is valid. If not, an error appears in the workspace view. Validation runs a "zero row" evaluation, which checks query schemas without returning rows. If a query's schema can't be determined within 10 minutes, the evaluation fails. If validation fails, the system uses the last saved version for refreshes.

Just-in-time publishing

Just-in-time publishing ensures your changes are available when needed. This section explains how the system handles publishing during refreshes and other operations.

Dataflow Gen2 uses an automated "just-in-time" publishing model. When you save a dataflow, changes are immediately available for the next refresh or execution. Syncing changes from Git or using deployment pipelines saves the updated dataflow in your workspace. The next refresh attempts to publish the latest saved version. If publishing fails, the error appears in the refresh history.

In some cases, the backend automatically republishes dataflows during refreshes to ensure compatibility with updates.

APIs are also available to refresh a dataflow without publishing or to manually trigger publishing.
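
As an illustration of calling such an API, the sketch below builds an on-demand job request for a dataflow. It assumes the generic Fabric job scheduler route (`/workspaces/{workspaceId}/items/{itemId}/jobs/instances?jobType=...`). The job type names `Refresh` and `Publish` used in the example are placeholders, not values confirmed by this article, so check the Dataflow public API reference for the exact route and job types. `build_job_request` is a hypothetical helper, and it only constructs the request without sending it.

```python
import urllib.parse
import urllib.request

# Assumed base URL for the Fabric REST API; verify before use.
FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_job_request(workspace_id: str, dataflow_id: str,
                      job_type: str, token: str) -> urllib.request.Request:
    """Build (but don't send) a POST request that starts an on-demand job for a dataflow."""
    query = urllib.parse.urlencode({"jobType": job_type})
    url = f"{FABRIC_API}/workspaces/{workspace_id}/items/{dataflow_id}/jobs/instances?{query}"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="POST",
    )


# Placeholder job type names; the real values may differ.
refresh_request = build_job_request("ws-1", "df-1", "Refresh", "fake-token")
publish_request = build_job_request("ws-1", "df-1", "Publish", "fake-token")
```

A publish request of this kind could run after a Git sync or a deployment pipeline step, followed by a refresh request once publishing completes.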

Limitations and known issues

Dataflow Gen2 with CI/CD and Git integration has the following limitations and known issues:

- When you delete the last Dataflow Gen2 with CI/CD and Git support in a workspace, the staging items become visible in the workspace. You can safely delete them.

- The workspace view doesn't show the ongoing refresh indication, last refresh, next refresh, or refresh failure indication.

- When a dataflow fails to refresh, no failure notification is sent automatically. As a workaround, use the orchestration capabilities of pipelines.

- When branching out to another workspace, a Dataflow Gen2 refresh might fail with the message that the staging lakehouse couldn't be found. When this happens, create a new Dataflow Gen2 with CI/CD and Git support in the workspace to trigger the creation of the staging lakehouse. After this, all other dataflows in the workspace should start to function again.

- When you sync changes from Git into the workspace or use deployment pipelines, you need to open the new or updated dataflow and save it manually in the editor. Saving triggers a publish action in the background so that the changes are used the next time the dataflow refreshes. You can also use the on-demand Dataflow publish job API call to automate the publish operation.

- The Power Automate connector for dataflows doesn't work with the new Dataflow Gen2 with CI/CD and Git support.